How Do Autonomous Vehicle Sensors Shape Modern Mobility?

Blog Summary:

Autonomous vehicles rely on advanced sensor systems to perceive their surroundings, navigate accurately, and operate safely without human input. From object detection and environmental awareness to real-world applications and challenges, sensors play a central role in enabling autonomous mobility. As sensor technologies and data-driven systems evolve, they continue to shape the future of intelligent and connected transportation.

Autonomous vehicles are no longer a futuristic concept—they are steadily becoming part of real-world transportation systems. At the core of this transformation lies a sophisticated network of sensing technologies that allow vehicles to perceive, interpret, and respond to their surroundings without human intervention.

These sensors act as the vehicle’s eyes, ears, and spatial awareness system, continuously collecting data from the environment.

Autonomous vehicle sensors enable vehicles to understand road conditions, detect objects, recognize traffic elements, and make informed driving decisions in real time.

Unlike conventional vehicles, which rely primarily on human judgment, autonomous systems operate entirely on sensor-driven intelligence to ensure safe, efficient performance. As autonomy levels increase, the accuracy, reliability, and coordination of these sensors become even more critical.

This blog explores how autonomous vehicle sensors function, the different types involved, the challenges they face, and their real-world applications. It also highlights how advanced data-driven approaches are shaping the future of sensor-enabled autonomous mobility.

What Are Autonomous Vehicle Sensors?

Autonomous vehicle sensors are specialized hardware components that collect real-time data on a vehicle’s surroundings, internal motion, and positioning. These sensors continuously monitor the environment and convert physical signals—such as light, sound, distance, and movement—into digital data that onboard systems can analyze and act upon instantly.

In an autonomous driving system, sensors do far more than just detect obstacles. They help the vehicle understand road geometry, identify moving and stationary objects, estimate distances, track speed, and determine precise location.

This sensor-generated data feeds perception, localization, and decision-making algorithms, enabling the vehicle to drive with minimal or no human involvement.

Autonomous vehicle sensors work together as a unified sensing ecosystem rather than in isolation. Each sensor type has strengths and limitations, which is why modern autonomous vehicles rely on sensor fusion: combining data from multiple sources to achieve a more accurate and reliable understanding of the driving environment.

This layered sensing approach ensures redundancy, improves accuracy, and enhances safety across diverse driving scenarios.
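To make the idea of sensor fusion concrete, here is a minimal sketch that combines two independent distance estimates using inverse-variance weighting, a standard textbook technique. The function name `fuse_estimates` and the noise figures are assumptions chosen for illustration, not values from any particular vehicle or vendor.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns the fused value and its variance. Assumes the measurement errors
    are independent and roughly Gaussian.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # A less noisy sensor (smaller variance) gets a larger weight.
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical readings of the distance to the same object, in metres.
radar = (25.4, 0.25)  # (distance, variance)
lidar = (25.0, 0.01)
distance, variance = fuse_estimates([radar, lidar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f}")
```

The fused variance is smaller than that of either input, which is the basic reason a combined estimate can be more trustworthy than any single sensor on its own.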

Why Are Sensors Critical for Autonomous Vehicles?

Sensors are the foundation of autonomous driving systems, enabling vehicles to perceive, interpret, and respond to their surroundings without human input.

Every driving decision—whether it’s slowing down, changing lanes, or stopping—is based on data collected and processed by sensors in real time. Without reliable sensing, autonomy cannot function safely or consistently.

Environmental Perception

Autonomous vehicles must maintain constant awareness of their surroundings to operate effectively. Sensors capture detailed information about nearby vehicles, pedestrians, road structures, traffic signals, and obstacles.

This real-time environmental perception allows the vehicle to build a dynamic map of its surroundings and continuously update it as conditions change. Accurate perception is essential for understanding complex driving environments, including intersections, highways, and urban streets.

Safety and Collision Avoidance

One of the most critical roles of sensors is preventing accidents. By continuously monitoring distances, relative speeds, and object trajectories, sensors help identify potential collision risks before they escalate.

Autonomous systems use this data to trigger immediate responses such as emergency braking, evasive steering, or speed adjustments. The reliability of autonomous vehicle sensors directly affects how effectively they protect passengers, pedestrians, and other road users.
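One simple way to turn distance and relative speed into a collision-risk signal is time-to-collision (TTC): the gap divided by the closing speed. The sketch below illustrates the idea; the thresholds and the response labels are hypothetical, and a production system would use far richer trajectory prediction.

```python
import math


def time_to_collision(gap_m, closing_speed_mps):
    """Return seconds until contact, or math.inf if the gap is not closing."""
    if gap_m < 0:
        raise ValueError("gap must be non-negative")
    if closing_speed_mps <= 0:
        return math.inf
    return gap_m / closing_speed_mps


def choose_response(ttc_s, brake_threshold_s=1.5, warn_threshold_s=3.0):
    """Map a TTC value to a coarse response (thresholds are illustrative)."""
    if ttc_s < brake_threshold_s:
        return "emergency_brake"
    if ttc_s < warn_threshold_s:
        return "slow_down"
    return "maintain"


# Hypothetical scenario: lead vehicle 20 m ahead, closing at 10 m/s.
ttc = time_to_collision(20.0, 10.0)
print(ttc, choose_response(ttc))
```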

Navigation and Localization

Autonomous vehicles must know their exact location at all times. Sensors support precise localization by tracking a vehicle’s position, orientation, and motion relative to its surroundings.

This information is essential for following planned routes, maintaining lane discipline, executing turns, and complying with road rules. Accurate navigation ensures that vehicles stay aligned with digital maps and adapt to real-world conditions.

Handling Diverse Conditions

Road environments are unpredictable and constantly changing. Sensors help autonomous vehicles adapt to variations in traffic density, road layouts, weather, and lighting conditions.

Whether driving in heavy rain, low light, or crowded urban areas, sensor systems provide the data needed to maintain operational reliability. This adaptability is key to making autonomous driving viable across different geographies and use cases.

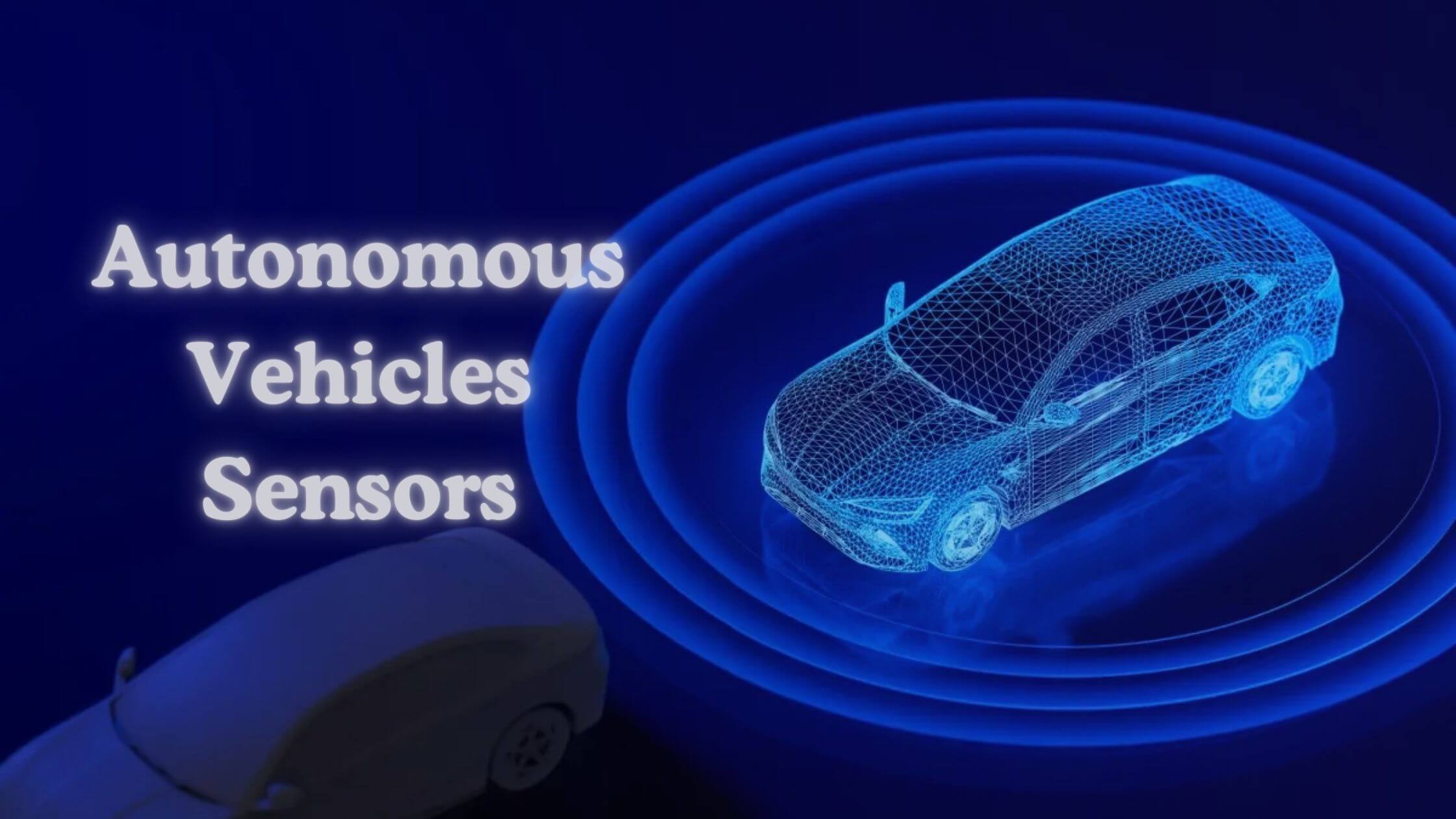

Types of Sensors Used in Autonomous Vehicles

Autonomous driving systems rely on a diverse set of sensors, each designed to capture specific types of information about the vehicle and its surroundings. No single sensor can provide complete environmental awareness, which is why autonomous vehicles use multiple sensor modalities that work together through sensor fusion.

This layered approach improves accuracy, reliability, and decision-making across different driving scenarios.

Core Perception Sensors

Core perception sensors are responsible for understanding what is happening around the vehicle. They detect objects, recognize road elements, and interpret the driving environment in real time.

Cameras, radar, and LiDAR fall into this category and are used to identify vehicles, pedestrians, traffic signs, lanes, and obstacles. These sensors provide the raw data needed for object detection, classification, and scene understanding.

By combining visual and distance-based inputs, perception sensors allow autonomous systems to accurately assess road conditions and predict how surrounding objects may move.

Localization & Motion Sensors

Localization and motion sensors focus on understanding the vehicle’s position and movement rather than the external environment. These sensors track speed, direction, acceleration, and orientation, helping the vehicle determine its position and motion at any given moment.

This information is critical for route planning, lane positioning, and maintaining stability during maneuvers.

Together, these sensors ensure that the vehicle’s internal awareness aligns with its perception of the outside world.
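As an illustration of how speed and turn-rate readings become a position estimate, the following sketch applies basic dead reckoning in two dimensions. The function `dead_reckon` and the sample values are assumptions for this example; real systems also have to handle sensor bias and noise.

```python
import math


def dead_reckon(x, y, heading_rad, speed_mps, yaw_rate_rps, dt):
    """Advance a 2D pose by one time step using speed and yaw rate."""
    heading_rad += yaw_rate_rps * dt
    x += speed_mps * math.cos(heading_rad) * dt
    y += speed_mps * math.sin(heading_rad) * dt
    return x, y, heading_rad


# Hypothetical run: drive straight at 10 m/s for one second in 0.1 s steps.
pose = (0.0, 0.0, 0.0)
for _ in range(10):
    pose = dead_reckon(*pose, speed_mps=10.0, yaw_rate_rps=0.0, dt=0.1)
print(pose)
```

Because each step builds on the previous one, small measurement errors accumulate over time, which is one reason motion sensors are paired with an absolute reference such as GNSS.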

LiDAR (Light Detection and Ranging)

LiDAR sensors use laser pulses to measure distances by calculating how long it takes for light to reflect from surrounding objects. This creates highly accurate, three-dimensional representations of the environment.

LiDAR is especially effective at detecting object shapes, sizes, and positions, making it valuable for complex driving environments such as intersections and urban areas.

The precise depth information it generates is critical for safe navigation and obstacle avoidance.
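The time-of-flight principle described above can be sketched in a few lines: the range is half the round-trip time multiplied by the speed of light, and each return can then be placed in 3D using the beam angles. The function names and the axis convention (x forward, y left, z up) are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0


def lidar_range_m(round_trip_s):
    """Distance to the reflecting surface; the pulse travels out and back."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Convert one return (range plus beam angles) into an (x, y, z) point."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return x, y, z


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
print(round(lidar_range_m(200e-9), 3))
```

Repeating this for many pulses per second across many beam angles is what produces the point cloud.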

GPS/GNSS

GPS and other global navigation satellite systems provide absolute positioning data that helps autonomous vehicles understand their geographic location.

While GPS alone may not always be precise enough for lane-level navigation, it becomes highly effective when combined with other sensors. This integration allows vehicles to follow routes, align with digital maps, and adjust to changes in road layouts.
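One common way to combine satellite positioning with other sensors is a Kalman filter. The sketch below is a deliberately simplified one-dimensional version, assuming position along a road as the only state, wheel odometry as the motion input, and a GNSS fix as the measurement. The function `kalman_step` and all noise values are illustrative assumptions.

```python
def kalman_step(position, variance, displacement, process_var, gps_position, gps_var):
    """One predict/update cycle of a 1D Kalman filter."""
    # Predict: move by the odometry displacement; uncertainty grows.
    predicted = position + displacement
    predicted_var = variance + process_var
    # Update: pull the prediction toward the GNSS fix, weighted by the gain.
    gain = predicted_var / (predicted_var + gps_var)
    new_position = predicted + gain * (gps_position - predicted)
    new_variance = (1.0 - gain) * predicted_var
    return new_position, new_variance


# Hypothetical data: (odometry displacement in m, GNSS position in m).
position, variance = 0.0, 4.0
steps = [(1.0, 1.3), (1.0, 1.8), (1.0, 3.2)]
for displacement, gps in steps:
    position, variance = kalman_step(position, variance, displacement, 0.05, gps, 2.0)
print(round(position, 2), round(variance, 2))
```

The variance shrinks as measurements arrive, reflecting growing confidence in the combined estimate compared with the GNSS fix alone.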

Ultrasonic Sensors

Ultrasonic sensors are short-range sensors commonly used for close-proximity detection. They emit sound waves to measure distances to nearby objects, making them ideal for low-speed scenarios such as parking, tight turns, and obstacle detection in confined spaces.

These sensors add an extra layer of safety by detecting objects that may not be visible to cameras or other long-range sensors.
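Ultrasonic ranging relies on echo timing, with the added wrinkle that the speed of sound changes with air temperature. The sketch below uses a common linear approximation for dry air; the function names are assumptions for this example.

```python
def speed_of_sound_mps(temp_c):
    """Approximate speed of sound in dry air (common linear approximation)."""
    return 331.3 + 0.606 * temp_c


def ultrasonic_distance_m(echo_time_s, temp_c=20.0):
    """Distance to the obstacle; the sound wave travels there and back."""
    return speed_of_sound_mps(temp_c) * echo_time_s / 2.0


# A 10 ms echo at 20 degrees Celsius corresponds to roughly 1.7 m.
print(round(ultrasonic_distance_m(0.010), 2))
```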

Autonomous Vehicle Sensors vs Traditional Vehicle Sensors

The sensor systems used in autonomous vehicles differ significantly from those found in traditional vehicles. While conventional vehicles rely on sensors for basic assistance and driver alerts, autonomous vehicles depend on advanced, multi-layered sensor ecosystems to operate independently.

The table below highlights the key differences across critical parameters:

| Aspect | Autonomous Vehicle Sensors | Traditional Vehicle Sensors |
|---|---|---|
| Purpose | Enable full or partial self-driving by perceiving, analyzing, and responding to the environment in real time | Assist the driver with alerts, warnings, or limited automation |
| Level of Automation | Designed for high levels of automation with minimal or no human intervention | Supports low-level automation with constant driver involvement |
| Sensor Types Used | Combines LiDAR, radar, cameras, GPS/GNSS, ultrasonic, and motion sensors | Typically includes basic cameras, parking sensors, and simple radar |
| Environment Awareness | Creates a detailed, real-time understanding of surroundings using sensor fusion | Offers limited awareness focused on specific tasks |
| Data Processing | Processes large volumes of sensor data using advanced algorithms and real-time analytics | Handles relatively small data sets with predefined logic |
| Safety Capabilities | Proactively predicts risks and executes autonomous safety actions | Reacts to hazards by alerting the driver |
| Decision-Making | Makes driving decisions independently based on sensor input | Leaves final decision-making to the human driver |
| Accuracy and Precision | Requires high precision for lane-level navigation and obstacle avoidance | Precision requirements are lower and task-specific |
| Integration Complexity | Highly complex integration across hardware, software, and data systems | Comparatively simple and isolated sensor integration |
| Cost and Maintenance | Higher initial cost and specialized maintenance requirements | Lower cost and easier maintenance |

This comparison highlights why advanced sensor architectures are essential for autonomy, while traditional vehicles continue to rely on simpler sensing mechanisms for driver assistance.

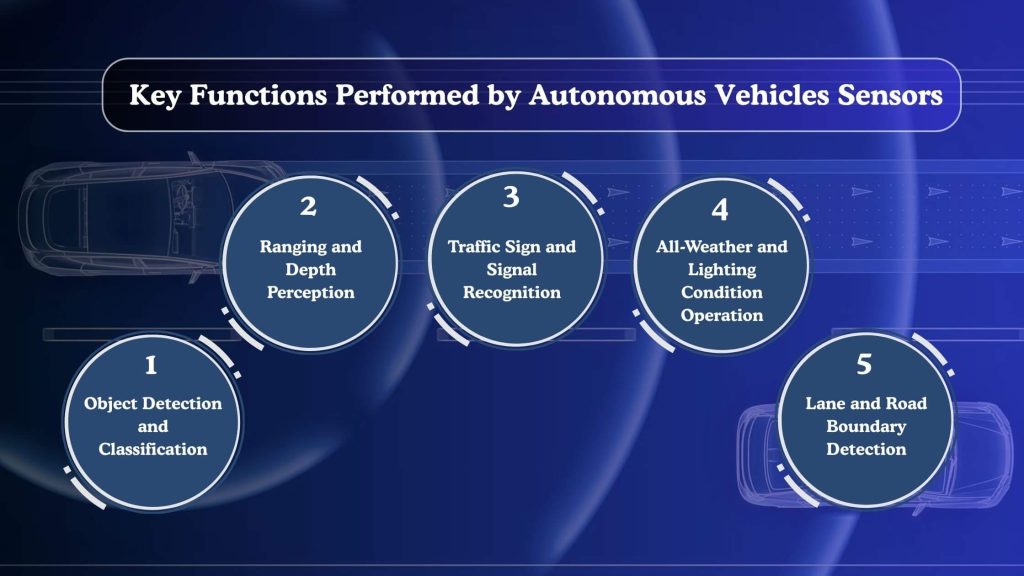

Key Functions Performed by Autonomous Vehicle Sensors

Autonomous vehicle sensors convert raw environmental and motion data into actionable intelligence.

These functions enable vehicles to perceive their surroundings, assess risks, and make real-time driving decisions without human input. Each function plays a crucial role in ensuring safety, efficiency, and reliability across different driving scenarios.

Object Detection and Classification

Sensors continuously identify and classify objects around the vehicle, including pedestrians, vehicles, cyclists, and roadside structures. By distinguishing between different object types, the system can predict behavior patterns and respond appropriately.

Accurate classification allows autonomous vehicles to prioritize safety-critical objects and adjust driving strategies accordingly.

Ranging and Depth Perception

Understanding how far objects are from the vehicle is essential for safe navigation. Sensors measure distance and relative position to determine safe following distances, overtaking opportunities, and stopping distances.

Depth perception enables smooth acceleration, braking, and steering by providing precise spatial awareness in both dynamic and static environments.
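To show how a measured range feeds a braking decision, the sketch below estimates stopping distance as the distance covered during system latency plus the braking distance under constant deceleration. The latency and deceleration figures are assumed values for illustration only.

```python
def stopping_distance_m(speed_mps, latency_s, decel_mps2):
    """Distance travelled before standstill: latency travel plus braking."""
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    latency_distance = speed_mps * latency_s
    braking_distance = speed_mps ** 2 / (2.0 * decel_mps2)
    return latency_distance + braking_distance


def can_stop_in_time(range_to_obstacle_m, speed_mps, latency_s=0.5, decel_mps2=8.0):
    """True if the vehicle can stop before reaching the measured obstacle."""
    return stopping_distance_m(speed_mps, latency_s, decel_mps2) <= range_to_obstacle_m


# At 20 m/s (72 km/h) with the assumed figures, stopping takes 35 m.
print(stopping_distance_m(20.0, 0.5, 8.0))
print(can_stop_in_time(40.0, 20.0))
```

Because braking distance grows with the square of speed, the range at which an obstacle must be detected rises quickly as the vehicle goes faster.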

Traffic Sign and Signal Recognition

Autonomous vehicles must interpret traffic rules to operate legally and safely. Sensors, particularly vision-based systems, detect and recognize traffic signs, signals, and road markings in real time.

This information helps the vehicle comply with speed limits, traffic lights, stop signs, and other regulatory elements without relying solely on preloaded maps.

All-Weather and Lighting Condition Operation

Driving conditions are not always ideal. Sensors support operation in varying weather and lighting environments, including rain, fog, low light, and nighttime driving.

By combining data from multiple sensor types, autonomous systems maintain situational awareness even when one sensor’s performance is degraded, strengthening the overall reliability of autonomous vehicle sensors.

Lane and Road Boundary Detection

Sensors detect lane markings, curbs, road edges, and barriers to keep the vehicle properly aligned within its driving path. This function is essential for lane keeping, lane changes, and safe navigation through complex road layouts.

Continuous lane detection ensures stability and reduces the risk of unintended lane departures.
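Once lane markings have been detected, keeping the vehicle centred comes down to simple geometry. The sketch below assumes the perception stack already reports the lateral distance from the vehicle's centreline to the left and right markings; the function names, vehicle width, and safety margin are hypothetical.

```python
def lane_offset_m(left_m, right_m):
    """Offset from lane centre; positive means the vehicle sits left of centre."""
    return (right_m - left_m) / 2.0


def is_departing(left_m, right_m, vehicle_width_m=1.8, margin_m=0.1):
    """True if either side of the vehicle is within the margin of a marking."""
    clearance = min(left_m, right_m) - vehicle_width_m / 2.0
    return clearance < margin_m


# Hypothetical reading: markings 1.5 m to the left and 2.0 m to the right.
print(lane_offset_m(1.5, 2.0), is_departing(1.5, 2.0))
```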

Challenges Associated with Autonomous Vehicle Sensors

Despite rapid advancements, sensor systems used in autonomous vehicles still face several technical and operational challenges.

These challenges can affect perception accuracy, system reliability, and overall driving safety if not properly addressed through design, testing, and data-driven optimization.

Weather & Lighting

Environmental conditions remain a major challenge for sensor performance. Heavy rain, fog, snow, and glare from sunlight can reduce visibility and interfere with sensor readings.

Cameras may struggle in low-light situations, and LiDAR returns can be scattered by rain, fog, and snow. Radar is generally more robust in adverse weather, though it can still suffer from attenuation and clutter. Ensuring consistent performance across diverse conditions requires intelligent sensor fusion and continuous calibration.

Accuracy & Reliability

Autonomous driving demands extremely high accuracy. Even minor errors in sensor data can lead to incorrect interpretations of the environment. Sensors must deliver precise, reliable data over extended periods while operating under constant motion and vibration.

Maintaining consistency across different road types, traffic scenarios, and speeds is a key challenge for large-scale deployment.

Interference & False Readings

Sensors can be affected by interference from external sources, including reflective surfaces, nearby vehicles, and electronic signals. False positives and negatives may cause the system to misinterpret objects or road conditions.

Managing sensor noise and filtering out unreliable data are essential to ensure autonomous vehicle sensors provide reliable inputs for decision-making systems.
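A simple example of filtering out unreliable readings is a sliding median filter, which suppresses isolated spikes without smearing them into neighbouring samples. This is only one of many possible techniques, and the sample data below is made up for illustration.

```python
from statistics import median


def median_filter(samples, window=3):
    """Replace each sample with the median of its neighbourhood.

    The window shrinks at the edges so the output has the same length.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    filtered = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        filtered.append(median(samples[lo:hi]))
    return filtered


# Hypothetical range readings in metres with one spurious spike.
readings = [10.0, 10.1, 55.0, 10.2, 10.3]
print(median_filter(readings))
```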

Cybersecurity & Vulnerabilities

As sensors are deeply integrated with software and connectivity frameworks, they introduce potential cybersecurity risks. Unauthorized access, data manipulation, or signal spoofing can compromise sensor integrity and vehicle behavior.

Protecting sensor data pipelines and ensuring secure communication between components is critical for maintaining trust in autonomous systems.
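One building block for protecting a sensor data pipeline is message authentication, so that a receiver can detect frames that were altered in transit. The sketch below uses Python's standard `hmac` module; the frame layout and the shared key are hypothetical, and a real deployment would also need key management and replay protection.

```python
import hashlib
import hmac
import json


def sign_frame(frame, key):
    """Serialize a sensor frame and compute an HMAC-SHA256 tag over it."""
    payload = json.dumps(frame, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return payload, tag


def verify_frame(payload, tag, key):
    """Return True only if the tag matches the payload under the shared key."""
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


# Hypothetical frame and key, for illustration only.
key = b"example-shared-secret"
payload, tag = sign_frame({"sensor": "radar_front", "range_m": 25.4, "seq": 1}, key)
print(verify_frame(payload, tag, key))
```

Authentication of this kind addresses tampering on the data path; it does not by itself defend against attacks on the physical signal, such as spoofed satellite or laser returns.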

Real-World Applications of Autonomous Vehicle Sensors

Autonomous vehicle sensors are already delivering tangible value across multiple real-world scenarios.

By enabling continuous perception, precise navigation, and intelligent decision-making, these sensors support safer operations and more efficient mobility systems in both consumer and commercial use cases.

Enhanced Safety and Collision Avoidance

Sensor-driven perception systems actively monitor surrounding traffic and predict potential hazards before they become critical. By tracking object motion, speed differences, and proximity, vehicles can take preventive actions, such as controlled braking or steering adjustments.

This proactive approach can reduce the likelihood of accidents, especially in high-density traffic and complex urban environments.

Environmental Monitoring

Sensors also help assess environmental conditions beyond immediate driving needs. Data on road surfaces, weather changes, visibility, and traffic patterns can be collected and analyzed to improve route planning and operational strategies.

This environmental awareness supports smoother driving behavior and helps vehicles adapt to changing conditions in real time.

Advanced Navigation and Route Planning

Accurate sensor data enables vehicles to follow optimal routes while accounting for real-world constraints, including road geometry, lane availability, and dynamic obstacles.

By continuously updating position and surroundings, autonomous systems can reroute efficiently, avoid congested areas, and maintain compliance with traffic rules. This capability is essential for reliable long-distance and urban navigation.

Parking and Low-Speed Operations

Low-speed scenarios such as parking, maneuvering in tight spaces, and navigating crowded areas rely heavily on short-range sensing.

Sensors detect nearby objects, curbs, and boundaries with high precision, enabling smooth, safe movement without human assistance. These capabilities are particularly valuable in urban environments and structured parking facilities.

Fleet Management

In commercial and shared mobility use cases, sensor data supports fleet-level intelligence. Vehicles can report operational status, usage patterns, and environmental conditions to centralized systems for analysis.

This insight helps optimize routing, improve maintenance planning, and enhance overall fleet efficiency, making autonomous vehicle sensors a key enabler of scalable autonomous transportation solutions.

BigDataCentric’s Role in Advancing Autonomous Vehicle Sensors

Building reliable autonomous vehicle systems goes far beyond deploying sensors—it requires the ability to process, analyze, and act on massive volumes of real-time data. This is where BigDataCentric plays a critical role.

With deep expertise in data engineering, machine learning, and advanced analytics, BigDataCentric helps organizations transform raw sensor data into actionable intelligence.

Autonomous vehicle ecosystems generate continuous streams of data from cameras, LiDAR, radar, and motion sensors. BigDataCentric designs scalable data pipelines that efficiently ingest, process, and manage high-frequency sensor data.

These pipelines ensure low-latency processing, which is essential for real-time perception, localization, and decision-making.

Another key area is sensor data fusion and analytics. BigDataCentric enables the integration of multi-sensor inputs into unified analytical models, improving accuracy and reliability across diverse driving conditions.

By applying advanced analytics and predictive modeling, organizations can enhance object detection, reduce false readings, and improve overall system robustness.

BigDataCentric also supports simulation, testing, and continuous optimization of sensor-driven systems. By leveraging historical and real-time data, autonomous platforms can be trained, validated, and refined to handle edge cases, environmental variability, and complex traffic scenarios more effectively. This data-centric approach helps accelerate innovation while maintaining safety and compliance standards.

A Final Word

Autonomous driving is fundamentally enabled by vehicles’ ability to sense, understand, and respond to their surroundings with precision and reliability. Sensors serve as the backbone of this capability, enabling continuous environmental awareness, intelligent decision-making, and safe navigation across diverse conditions.

As autonomous technologies mature, the importance of robust sensing systems becomes even more pronounced.

Autonomous vehicle sensors will continue to evolve in accuracy, integration, and resilience, supporting higher levels of automation and broader real-world adoption.

By combining advanced sensor technologies with strong data engineering and analytics foundations, organizations can build autonomous systems that are not only innovative but also safe, scalable, and future-ready.

FAQs

Are autonomous vehicle sensors safe and reliable for real-world use?

Autonomous vehicle sensors are designed with redundancy and sensor fusion to improve safety and reliability. While they perform well in many real-world conditions, continuous testing, validation, and monitoring are essential to handle edge cases and complex environments.

How do LiDAR and radar sensors differ in autonomous driving?

LiDAR provides high-resolution 3D mapping and precise object-shape detection, while radar excels at measuring distance and speed, even in adverse weather. Together, they complement each other to improve perception accuracy.

Are autonomous vehicle sensors compatible with smart city infrastructure?

Yes, these sensors can integrate with smart city systems through connectivity and data exchange. This compatibility supports traffic management, infrastructure monitoring, and more efficient urban mobility.

What innovations are expected in autonomous vehicle sensors in the next decade?

Future innovations include improved sensor accuracy, reduced hardware costs, better performance in adverse conditions, and deeper integration with real-time data and connected infrastructure systems.

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.
