Learning Rate in Machine Learning: Key Concepts & Tips

Blog Summary:

Understanding how the learning rate shapes training can make or break a model’s performance. This guide explores how the Learning Rate in Machine Learning works, why it matters, and how modern strategies like decay schedules, adaptive methods, and meta-learning improve model stability. You’ll also discover real-world applications and how BigDataCentric applies optimized learning rate techniques across its solutions.

Training any model—whether a simple regression model or a large neural network—depends heavily on how effectively it learns from data. A major part of this learning process is governed by the Learning Rate in Machine Learning, a parameter that controls the step size during optimization.

When set correctly, it helps a model converge smoothly toward the desired outcome. When misconfigured, it can cause slow progress, oscillation, or complete failure to learn.

Modern training workflows, especially in deep learning and large neural networks, require more than a single static learning rate. Techniques such as decay schedules, adaptive adjustments, warm-ups, and cyclical patterns ensure that models learn efficiently while maintaining stability.

As training becomes more complex across domains such as healthcare, finance, autonomous vehicles, Natural Language Processing (NLP), and computer vision, the importance of a thoughtful learning rate strategy continues to grow.

This guide will cover everything from core concepts and real-world applications to modern learning rate strategies, practical implementation steps, and how optimized learning rates impact different machine learning domains.

What is the Learning Rate in Machine Learning?

The learning rate is a fundamental hyperparameter that determines how far a model moves its weights on each update during training. Each update is guided by the gradient, which gives the direction and magnitude of the change needed to reduce the loss function.

The learning rate controls the size of this adjustment. A small value results in slow, steady learning, while a high value pushes the model to take larger steps toward the minimum.

In most optimization algorithms, including gradient descent and its variants, the learning rate acts as the scaling factor for each weight update. If it’s too high, the updates may overshoot the optimal point, leading to divergence or unstable behavior.

If it’s too low, the model may take an excessively long time to converge or get stuck in local minima.
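To make the three regimes concrete, here is a minimal sketch of plain gradient descent on a toy loss, f(w) = w². The function name, the toy loss, and the specific learning rates are assumptions chosen for illustration, not values from any real model.

```python
def gradient_descent(lr, steps=50, w0=5.0):
    """Minimize the toy loss f(w) = w**2 and return the final weight."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w        # derivative of w**2
        w = w - lr * grad     # the learning rate scales every update
    return w

# Illustrative values only; the minimum is at w = 0.
too_low = gradient_descent(lr=0.001)   # has barely moved away from 5.0
good = gradient_descent(lr=0.1)        # ends very close to 0
too_high = gradient_descent(lr=1.1)    # overshoots on every step and diverges
```

On this toy problem, each step multiplies the weight by (1 - 2 × lr), so any rate above 1.0 makes the weight grow instead of shrink. Real loss surfaces are not this simple, but the same overshoot pattern shows up as a rising or oscillating loss curve.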

In deep learning environments where models contain millions of parameters, tuning the learning rate becomes even more critical. The right setting ensures smoother convergence, improved generalization, and better overall training efficiency.

Whether you’re building a classical machine learning pipeline or training a neural network, selecting and adjusting the learning rate directly influences the model’s performance and reliability.

Where is Learning Rate Headed?

The role of the learning rate has evolved significantly as models have grown more complex and data volumes continue to expand. Traditional fixed values are no longer sufficient for modern workloads, especially in deep learning, where training dynamics shift throughout a run.

The direction of learning rate innovation now focuses on making adjustments smarter, faster, and more context-aware.

Below are the major trends shaping the future of learning rate strategies:

Real-time Adjustment

Real-time learning rate adjustment is becoming a standard approach in advanced training pipelines. Instead of relying on predefined schedules, models now adjust their step size based on training behaviour.

When progress slows or gradients become unstable, the learning rate adapts instantly. This helps avoid stagnation and supports more efficient convergence without manual tuning.

Exploratory Cycles

Exploratory cycling introduces controlled fluctuations in the learning rate during training. By intentionally increasing and decreasing its value across phases, models avoid getting stuck in local minima.

This technique is particularly useful in high-dimensional neural network settings with a rugged loss landscape. Cyclical methods help the optimizer explore more freely before settling into stable learning.

Auto-tuning & NAS

Auto-tuning algorithms and Neural Architecture Search (NAS) systems increasingly treat the learning rate as a tunable component. Automated systems test different learning rate patterns, evaluate performance, and select strategies that yield the most stable and efficient training results.

This reduces manual trial-and-error and ensures more consistent outcomes across diverse datasets and architectures.

Meta-learning Trends

Meta-learning approaches go a step further by teaching models how to learn. Instead of manually adjusting parameters, meta-learning frameworks enable models to infer optimal learning rate behaviours from prior training experiences.

Over time, they adapt more quickly and efficiently to new tasks, making them ideal for scenarios that involve frequent retraining or domain shifts.

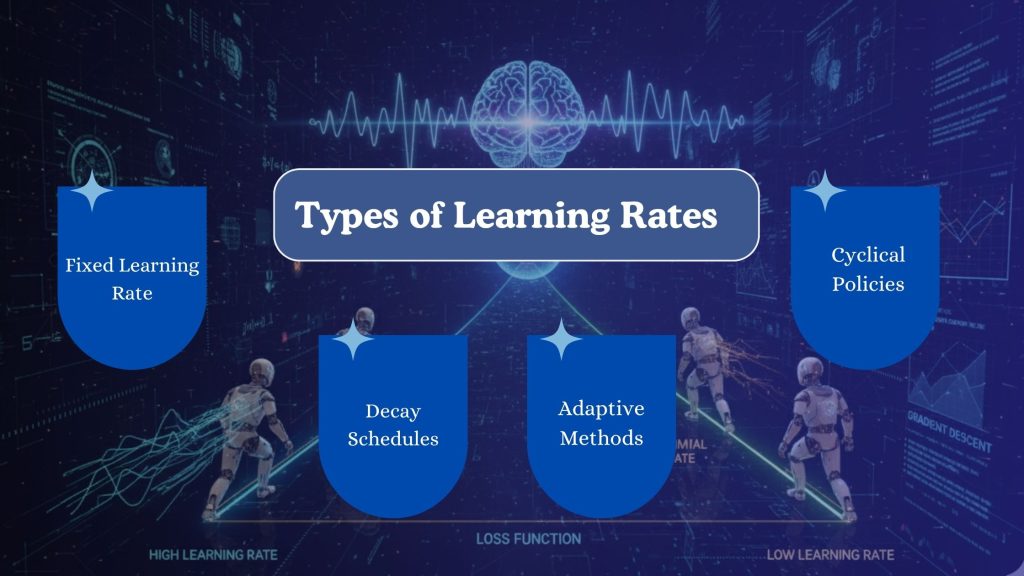

Types of Learning Rates

Different training scenarios require different approaches to controlling the learning rate. Instead of relying on a single fixed value, modern workflows use structured techniques that adapt as training progresses.

These methods help maintain stability, prevent overshooting, and improve overall convergence efficiency, especially in deep learning, where model behaviour shifts across epochs.

Fixed Learning Rate

A fixed learning rate keeps the step size constant throughout training. It’s simple to implement and works well for smaller models or problems with predictable loss landscapes. However, in large-scale neural networks, a fixed value may limit performance.

If the rate is too high, training becomes unstable; if too low, it slows down significantly. Despite its limitations, fixed rates remain a baseline strategy in many workflows.

Decay Schedules

Decay schedules gradually reduce the learning rate over time. Early in training, large steps help the model learn quickly. As it approaches the minimum, smaller steps support fine-tuning.

Common decay strategies include step, exponential, and polynomial decay. This approach is widely used in machine learning pipelines because it strikes a balance between speed and precision.
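The three strategies named above can be sketched as small functions of the epoch number. The function names, parameter names, and default values here are illustrative assumptions; frameworks expose their own variants with different signatures.

```python
import math

def step_decay(lr0, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the rate by `drop` once every `epochs_per_drop` epochs."""
    return lr0 * drop ** (epoch // epochs_per_drop)

def exponential_decay(lr0, epoch, k=0.05):
    """Shrink the rate continuously by a factor of exp(-k) per epoch."""
    return lr0 * math.exp(-k * epoch)

def polynomial_decay(lr0, epoch, total_epochs, end_lr=0.0, power=2.0):
    """Move from lr0 to end_lr along a polynomial curve over total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs
    return (lr0 - end_lr) * (1.0 - progress) ** power + end_lr
```

For example, `step_decay(0.1, 25)` has been halved twice and returns 0.025, while `polynomial_decay(0.1, 100, 100)` has reached its end value of 0.0.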

Adaptive Methods

Adaptive learning rate methods automatically adjust the step size based on gradient behaviour. Algorithms like Adam, RMSProp, and Adagrad adjust the learning rate per parameter, making them highly effective for deep learning scenarios.

They help stabilize noisy gradients, accelerate training, and improve performance on complex datasets.
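As a rough sketch of what "per parameter" means, the following implements one Adagrad-style update in plain Python. The function name and the list-based layout are assumptions for illustration; library implementations work on tensors and add further options.

```python
import math

def adagrad_step(params, grads, accum, lr=0.1, eps=1e-8):
    """One Adagrad-style update.

    accum holds the running sum of squared gradients for each parameter,
    so parameters with a history of large gradients get a smaller step.
    """
    new_params, new_accum = [], []
    for p, g, a in zip(params, grads, accum):
        a = a + g * g                            # accumulate squared gradient
        effective_lr = lr / (math.sqrt(a) + eps) # per-parameter step size
        new_params.append(p - effective_lr * g)
        new_accum.append(a)
    return new_params, new_accum

# Two parameters whose gradients differ by a factor of 100 (made-up values).
params, accum = adagrad_step([1.0, 1.0], [10.0, 0.1], [0.0, 0.0])
```

Even though the gradients differ by two orders of magnitude, both parameters move by roughly the same amount on this first step (each ends near 0.9), because each one is scaled by its own gradient history.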

Cyclical Policies

Cyclical learning rate policies oscillate between lower and upper bounds during training. Instead of decreasing monotonically, the learning rate periodically rises and falls. This prevents the model from settling too early and encourages exploration of different regions of the loss landscape.

Cyclical strategies are popular in computer vision and NLP tasks due to their ability to escape plateaus and improve generalization.
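One simple way to realize such a policy is a triangular wave between the two bounds. This is a minimal sketch; the function name, the bounds, and the half-cycle length are placeholder assumptions to tune per task.

```python
def triangular_lr(step, base_lr=1e-4, max_lr=1e-2, step_size=100):
    """Triangular cyclical schedule.

    The rate climbs linearly from base_lr to max_lr over step_size steps,
    then falls back to base_lr over the next step_size steps, and repeats.
    """
    cycle_pos = step % (2 * step_size)     # position inside the current cycle
    x = abs(cycle_pos / step_size - 1.0)   # 1 at the cycle ends, 0 at the peak
    return base_lr + (max_lr - base_lr) * (1.0 - x)
```

With the defaults, the rate starts at the lower bound at step 0, reaches the upper bound at step 100, and is back at the lower bound at step 200, where the next cycle begins.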

Components of a Learning Rate Strategy

A well-designed learning rate strategy isn’t just about picking a number—it involves understanding how different factors interact throughout the training process. From data complexity to momentum behaviour, each component influences how efficiently a model converges.

These elements become even more important in deep learning, where training dynamics shift rapidly.

Data & Model Factors

The ideal learning rate depends heavily on the dataset and model architecture. Complex datasets with high variability often require smaller learning rates to stabilize training. Larger architectures, especially deep neural networks with millions of parameters, also demand more careful tuning.

In contrast, simpler models with clean, structured data can handle more aggressive step sizes. Understanding these characteristics helps avoid unnecessary instability during training.

Initial Value & Warm-up

The initial learning rate sets the tone for the entire training process. Starting too high may cause the model to diverge before it even begins learning effectively. To address this, many training pipelines use a warm-up phase in which the learning rate gradually increases from a low value to the target rate over a few initial iterations.

This is especially useful for large-scale neural network architectures, where early-stage gradients can be noisy and unpredictable.
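A linear warm-up is the simplest version of this idea. The sketch below assumes a hypothetical target rate of 1e-3 and a 500-step warm-up; both numbers are placeholders, not recommendations.

```python
def warmup_lr(step, target_lr=1e-3, warmup_steps=500, start_lr=0.0):
    """Ramp linearly from start_lr to target_lr, then hold target_lr."""
    if step >= warmup_steps:
        return target_lr
    return start_lr + (target_lr - start_lr) * step / warmup_steps
```

In practice the constant phase after warm-up is usually replaced by a decay schedule, so the warm-up only governs the first few hundred or thousand iterations.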

Decay & Cycles

Once training is underway, planned adjustments help maintain momentum without overshooting. Decay schedules gradually reduce the learning rate as the model approaches convergence.

Meanwhile, cyclical patterns periodically increase it to help the optimizer explore new paths and avoid local minima. Together, these strategies provide a balanced approach that supports both exploration and precise fine-tuning.

Momentum & Batch Size

Momentum interacts closely with the learning rate by controlling how much past gradients influence current updates. Higher momentum can accelerate training but may require a lower learning rate to stay stable.

Batch size also plays a role: larger batches often allow for higher learning rates due to more stable gradient estimates, while smaller batches may need smaller step sizes. Tuning these elements together ensures a smoother training curve and more reliable convergence.
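The interaction between momentum and the learning rate can be seen in a small experiment. The sketch below assumes the common "velocity = momentum × velocity + gradient" formulation and an artificial gradient that never changes; the function name is hypothetical.

```python
def momentum_step_size(lr, momentum, steps=500, grad=1.0):
    """Return the size of the latest update when the gradient stays constant.

    Uses the update rule: velocity = momentum * velocity + grad,
    followed by a weight change of lr * velocity.
    """
    velocity = 0.0
    for _ in range(steps):
        velocity = momentum * velocity + grad
    return lr * velocity

plain = momentum_step_size(lr=0.01, momentum=0.0)      # about 0.01
with_momentum = momentum_step_size(lr=0.01, momentum=0.9)  # about 0.1
```

Under this assumption, the velocity settles at grad / (1 - momentum), so a momentum of 0.9 makes steady-state updates about ten times larger than the learning rate alone suggests. That amplification is one reason a higher momentum may call for a lower learning rate.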

Learning Rate Examples and Applications

The impact of the learning rate becomes most clear when applied to real-world systems. Across industries, it shapes how quickly and accurately models learn patterns, adapt to noise, and generalize.

From vision tasks to fraud analytics, each domain benefits from a carefully designed learning rate strategy that balances exploration and stability.

Below are key applications where learning rate tuning plays a central role.

Healthcare

In healthcare models—such as disease prediction, medical imaging, or diagnostic classification—the right learning rate ensures stable convergence even with noisy, imbalanced datasets.

For example, a well-chosen learning rate schedule helps MRI segmentation models train stably and maintain precision across varying image types. Gradual decay and warm-up phases are commonly used to improve reliability in sensitive predictions.

Trading & Finance

Financial models operate on volatile, non-stationary data, making the stability of the learning rate critical. Algorithms used for price prediction, risk modeling, or anomaly detection rely on adaptive learning rate techniques to respond to shifting trends.

Adaptive optimizers like Adam perform well in these environments because they adapt to noisy gradients and rapidly changing patterns. Proper tuning helps prevent overreaction during market spikes or dips.

Autonomous Vehicles

Autonomous systems depend on learning rate optimization to train perception, navigation, and decision-making models. For vision tasks such as lane detection or obstacle recognition, cyclical learning rates help models generalize better across varied road conditions.

In reinforcement learning components—like path planning—adaptive learning rates ensure controlled updates when reward signals fluctuate.

NLP Transformers

Transformer architectures, widely used for sentiment analysis, translation, and large-scale text modeling, rely heavily on learning rate schedules that combine warm-up with decay. Their training involves enormous parameter counts, making a stable learning rate schedule essential.

The warm-up phase prevents early instability, while decay schedules refine accuracy in later epochs. These patterns support strong generalization on textual datasets.
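One published example of this warm-up-then-decay pattern is the schedule described in the original Transformer paper, "Attention Is All You Need", which ramps the rate up linearly and then decays it with the inverse square root of the step number. The sketch below uses that paper's formula; the function name is an assumption, and the defaults (model width 512, 4000 warm-up steps) follow the paper's base configuration rather than anything in this article.

```python
def transformer_lr(step, d_model=512, warmup_steps=4000):
    """Warm-up followed by inverse-square-root decay.

    lr = d_model**-0.5 * min(step**-0.5, step * warmup_steps**-1.5)
    Steps are counted from 1; step 0 is treated as step 1.
    """
    step = max(step, 1)
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)
```

The two terms inside `min` cross exactly at `step == warmup_steps`, which is where the rate peaks. Many fine-tuning setups use a simpler linear warm-up with linear or cosine decay instead.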

Vision & Detection

Computer vision tasks—object detection, classification, segmentation—are highly sensitive to the learning rate. Cyclical policies often accelerate convergence while improving robustness.

Models like CNNs and Vision Transformers perform better when training cycles include structured learning rate fluctuations to escape plateaus commonly found in visual feature extraction.

Recommendation Systems

Recommendation engines learn subtle user patterns from sparse data. Learning rate adjustments help them avoid local minima and maintain accuracy as user interactions change.

Adaptive learning rate approaches allow these systems to fine-tune embeddings and ranking layers efficiently without manual retuning.

Fraud Detection

Fraud detection relies on detecting rare anomalies within massive datasets. A learning rate that is too high can make the model overly sensitive to noise, while one that is too low can slow its ability to recognize new fraud patterns.

Scheduled decay and momentum balancing help maintain stability while adapting to new fraudulent behaviours.

Robotics

Robotic control systems use reinforcement learning and deep neural networks that require precise learning rate control. Cyclical and adaptive policies support stable policy updates, especially in dynamic environments.

These methods enable robots to learn motion control, object handling, and navigation tasks more efficiently.

How to Implement Learning Rate in Machine Learning?

Implementing the learning rate effectively requires more than selecting a number—it involves understanding how the optimizer behaves, how the data influences gradients, and how training evolves.

A well-implemented plan prevents instability, accelerates convergence, and improves the final model’s accuracy.

Below are the practical steps used across machine learning workflows, deep learning pipelines, and various neural network setups.

Choosing a Start Value

Selecting an initial learning rate depends on the model architecture and dataset complexity. A common starting point is 0.001-0.01 for most neural networks, but experimentation is essential. Too high, and the model may diverge immediately; too low, and training becomes inefficient. Many practitioners begin with a slightly conservative value and adjust based on loss curves during the first few epochs. Tools like the learning rate range test can also help identify an appropriate starting point by sweeping values and observing where loss improves.
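The sweep idea can be sketched on a toy problem. Note that this is a simplified variant: the usual learning rate range test raises the rate within a single training run, whereas this sketch runs a short, independent trial per candidate. The toy loss, function names, and candidate grid are all assumptions for illustration.

```python
def loss(w):
    """Toy loss with its minimum at w = 3."""
    return (w - 3.0) ** 2

def grad(w):
    return 2.0 * (w - 3.0)

def short_run(lr, steps=20, w0=0.0):
    """Train for a few steps from the same start and return the final loss."""
    w = w0
    for _ in range(steps):
        w -= lr * grad(w)
    return loss(w)

# Sweep candidates on a log scale: 1e-4, 1e-3, 1e-2, 0.1, 1, 10.
candidates = [10 ** e for e in range(-4, 2)]
results = {lr: short_run(lr) for lr in candidates}
best_lr = min(results, key=results.get)
```

On this toy loss, the smallest rates barely reduce the loss in 20 steps, 0.1 gets close to the minimum, 1 bounces back and forth without improving, and 10 diverges. On a real model, the same sweep is read off a loss-versus-learning-rate plot rather than a single best value.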

Using Decay Schedules

Decay schedules help models transition from rapid early learning to precise late-stage tuning. As training progresses, gradually reducing the learning rate improves stability and reduces oscillation around the minimum. Common approaches include:

- Step decay: reduces the rate at fixed intervals

- Exponential decay: decreases continuously based on a decay factor

- Cosine decay: lowers the rate smoothly along a cosine curve; some variants periodically reset it to the starting value (warm restarts)

These schedules support smoother convergence, especially in training setups that involve large datasets or multi-stage pipelines. When working with tasks like image classification or NLP, decay schedules often serve as the backbone of reliable training.
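As an illustration of the cosine option from the list above, here is a minimal sketch with and without periodic resets. The function names and default rates are assumptions; framework schedulers differ in naming and in how they count steps.

```python
import math

def cosine_decay(step, total_steps, lr_max=1e-3, lr_min=0.0):
    """Follow half a cosine wave from lr_max down to lr_min."""
    progress = min(step, total_steps) / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))

def cosine_with_restarts(step, cycle_steps, lr_max=1e-3, lr_min=0.0):
    """Restart the cosine curve from lr_max every cycle_steps steps."""
    return cosine_decay(step % cycle_steps, cycle_steps, lr_max, lr_min)
```

The plain version changes slowly at the start and end and fastest in the middle of training. The restart version jumps back to `lr_max` at the start of each cycle, which ties it to the cyclical policies discussed earlier.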

Adaptive Optimizers

Adaptive optimizers such as Adam, RMSProp, and Adagrad automatically adjust learning rates based on gradient patterns. They are particularly effective in deep learning scenarios where the gradient behaviour varies across layers.

These optimizers help handle sparse data, noisy gradients, and shifting loss landscapes. Adam, for instance, adjusts the learning rate for each parameter using first- and second-moment estimates, resulting in faster and more stable learning across complex architectures.
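The moment estimates can be written out for a single scalar parameter. This is a bare-bones sketch of the standard Adam update with its commonly used default coefficients; the function name and the scalar-only layout are assumptions, and library versions operate on whole tensors.

```python
import math

def adam_step(w, g, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single parameter; t is the step count from 1."""
    m = beta1 * m + (1 - beta1) * g        # first moment: running mean of gradients
    v = beta2 * v + (1 - beta2) * g * g    # second moment: running mean of squares
    m_hat = m / (1 - beta1 ** t)           # correct the bias from starting at zero
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

# First step from w = 0 with a large and a small gradient (made-up values).
w_big, _, _ = adam_step(w=0.0, g=-6.0, m=0.0, v=0.0, t=1)
w_small, _, _ = adam_step(w=0.0, g=-0.006, m=0.0, v=0.0, t=1)
```

Both parameters move by roughly the learning rate (about 0.001) on this first step even though their gradients differ by a factor of 1000, which shows how dividing by the second-moment estimate normalizes the step size per parameter.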

Monitoring & Reducing

Throughout training, ongoing monitoring ensures the learning rate remains aligned with the model’s behaviour. If the loss stagnates, fluctuates heavily, or rises unexpectedly, adjusting the learning rate can resolve the issue. Common monitoring methods include:

- Tracking training vs. validation loss

- Visualizing learning curves

- Using callbacks like ReduceLROnPlateau, which lowers the learning rate when performance stops improving

These techniques help maintain steady progress and prevent wasted computation in later training stages.
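The plateau-based idea behind callbacks like ReduceLROnPlateau can be sketched in a few lines. The class below is a simplified stand-in written for illustration, not the Keras implementation: the class name, defaults, and the strict "must improve" rule are assumptions.

```python
class PlateauReducer:
    """Lower the learning rate when validation loss stops improving."""

    def __init__(self, lr, factor=0.1, patience=3, min_lr=1e-6):
        self.lr = lr
        self.factor = factor        # multiply lr by this on a plateau
        self.patience = patience    # epochs without improvement to tolerate
        self.min_lr = min_lr        # never go below this rate
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the current lr."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0
        return self.lr

# Example with made-up validation losses: two flat epochs trigger one cut.
reducer = PlateauReducer(lr=0.01, patience=2)
history = [reducer.step(loss) for loss in [1.0, 0.8, 0.8, 0.8, 0.7]]
```

In this run the rate stays at 0.01 for the first three epochs and drops to about 0.001 on the fourth, after the loss has failed to improve twice in a row.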

How Does BigDataCentric Utilize Learning Rate Optimization in Its Solutions?

BigDataCentric integrates learning rate optimization as a core element of its model development process across domains such as data science, machine learning, business intelligence, and chatbot solutions.

Different projects require different learning rate behaviours, so the approach varies depending on the model type, dataset scale, and task complexity.

For large neural network architectures, structured learning rate schedules are used to maintain stability during early training and improve precision later. Warm-ups, decay strategies, and adaptive optimizers help models converge efficiently without sacrificing accuracy.

In scenarios involving unstructured or high-variance data—such as text, user behaviour logs, or sensor readings—adaptive learning rate methods enable models to adjust quickly while avoiding overfitting.

BigDataCentric also leverages evaluation-driven tuning. Techniques such as loss curve monitoring, ReduceLROnPlateau, and gradient diagnostics guide the selection and adjustment of learning rate schedules throughout training. These practices ensure that models continue to improve while staying robust across real-world conditions.

Internal resources, such as insights on machine learning development services and business intelligence services from BigDataCentric’s existing knowledge base, naturally support these optimization workflows, helping teams select strategies that align with broader solution architectures.

A Final Word

The learning rate remains one of the most influential components in shaping how models learn, adapt, and converge. From simple implementations to advanced strategies involving decay cycles, adaptive methods, and meta-learning, its impact spans every stage of training.

Whether you’re working with deep networks, structured data models, or real-time systems, the right learning rate approach can significantly improve both performance and efficiency.

As machine learning continues to advance across industries, understanding and applying the right learning rate strategies becomes essential.

With techniques evolving toward automation, real-time responsiveness, and architecture-aware tuning, the learning rate will continue to play a central role in building reliable, high-performing models.

FAQs

How does batch size influence the learning rate?

A larger batch size allows for a slightly higher learning rate because the gradient estimates are more stable. Smaller batch sizes often require a lower learning rate to avoid unstable updates.

Is 0.001 a good learning rate?

Yes, 0.001 is widely considered a strong default learning rate and is the default value for several Keras optimizers, including Adam and RMSprop. It offers a balanced, stable pace for most training setups, but treat it as a starting point and adjust it based on how the loss curve behaves.

Can the learning rate change during training?

Yes, learning rates often change during training using schedules (step decay, cosine decay) or adaptive methods. This helps models converge faster and avoid getting stuck.

Is a higher learning rate better?

Not necessarily. A higher learning rate may speed up convergence, but can also cause the model to overshoot or bounce around the optimal point. In contrast, a lower learning rate offers more stability but often trains more slowly and risks getting trapped in suboptimal regions.

What is the learning rate 2e-5?

A learning rate of 2e-5 (0.00002) is commonly used for fine-tuning transformer-based models, as it provides stable, controlled parameter updates. It serves as a reliable starting point, though adjustments may be required based on training stability or observed performance.

About Author

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.
