How to Use Machine Learning for Streaming Data with Python?

Blog Summary:

In this blog, you’ll learn how to use machine learning for streaming data with Python—from setting up your environment to building real-time ML models. We cover essential Python libraries, step-by-step implementation, and key best practices to ensure your streaming pipelines are scalable, responsive, and production-ready.

In today’s fast-paced digital landscape, data is generated continuously—from financial transactions and smart devices to real-time user interactions. Traditional batch processing methods struggle to keep up with this deluge of live data.

This is where machine learning for streaming data with Python proves to be a game-changer. It allows businesses to react in real time—whether it’s detecting fraud as it occurs, adapting recommendations on-the-fly, or managing industrial sensors in real-world environments.

Unlike static datasets, streaming data is dynamic and ever-changing. This requires models that can learn and adapt continuously without retraining from scratch. ML models on streaming data must handle noise, incomplete data, and concept drift while delivering accurate insights instantly.

Python’s robust ecosystem, combined with tools like River, Scikit-Multiflow, Kafka, and PySpark Streaming, makes it one of the most widely used languages for stream data analysis.

Many companies now rely on professional Machine Learning Services to develop and maintain real-time ML pipelines that scale efficiently and adapt quickly to changing data streams.

In this guide, we’ll explore everything from the basics of streaming data to building a real-time machine learning pipeline in Python. Whether you’re new to the topic or looking to level up your real-time data skills, this blog will walk you through setup, libraries, model building, and best practices for using machine learning for streaming data with Python effectively.

Understanding Streaming Data

Streaming data refers to the continuous flow of data generated in real time from various sources such as sensors, user activity logs, financial tickers, or social media feeds. Unlike batch data, which is collected and processed in chunks, streaming data is processed on the fly—requiring systems to ingest, analyze, and act on information in near real-time.
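To make the contrast concrete, here is a minimal sketch in plain Python. The function names and the sample readings are made up for illustration. The batch version needs the whole dataset before it can answer, while the streaming version refreshes its answer after every record.

```python
from typing import Iterable, Iterator, Sequence


def batch_mean(values: Sequence[float]) -> float:
    """Batch style: the full dataset must be available before computing."""
    return sum(values) / len(values)


def streaming_mean(stream: Iterable[float]) -> Iterator[float]:
    """Streaming style: yield an updated mean after every new value."""
    count = 0
    mean = 0.0
    for value in stream:
        count += 1
        mean += (value - mean) / count  # incremental mean update
        yield mean


readings = [20.0, 22.0, 21.0, 25.0]  # hypothetical sensor readings
running = list(streaming_mean(readings))
print(running)
```

Both approaches agree once all four readings have arrived, but only the streaming version had a usable estimate after the first one.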

This paradigm shift opens new opportunities across domains like fraud detection, predictive maintenance, dynamic pricing, and smart city infrastructure.

A key characteristic of streaming data is its velocity—data arrives rapidly and often in high volume. This presents challenges around storage, latency, and consistency, especially when working with ML models on streaming data. Moreover, streaming data can be irregular or incomplete, making it essential to build robust systems that can handle noise and missing values gracefully.

Another important concept is the temporal nature of streaming data. Since new information constantly arrives, insights are often time-sensitive, and delayed processing can lead to outdated decisions.

To handle such demands, real-time pipelines must be designed for scalability, fault tolerance, and adaptability. With Python’s growing ecosystem tailored for Python stream data analysis, data practitioners can now easily implement streaming architectures using tools like Apache Kafka, PySpark Streaming, or MQTT brokers—enabling end-to-end streaming workflows integrated with machine learning.

As we proceed, you’ll learn how to build these workflows using some of Python’s most powerful libraries for streaming ML.


Why is Machine Learning on Streaming Data Important?

In today’s real-time economy, decisions often need to be made instantly. Whether it’s detecting fraud in banking, adjusting pricing in eCommerce, or responding to live sensor data in manufacturing, traditional batch-based systems fall short.

Machine learning for streaming data with Python enables systems to learn and adapt continuously—empowering businesses to react to new patterns as they emerge. This shift from reactive to proactive intelligence helps reduce risk, improve user experience, and increase operational efficiency.

Streaming ML models provide immediate insights that can be acted upon without delay. For example, ML models on streaming data can trigger real-time alerts when abnormal activity is detected or deliver personalized recommendations during an ongoing user session.

Python’s ecosystem, supporting tools like Kafka, River, and PySpark, makes it easier to integrate these models into production environments. By adopting Python stream data analysis, organizations can maintain a competitive edge in fast-moving industries where every second counts.

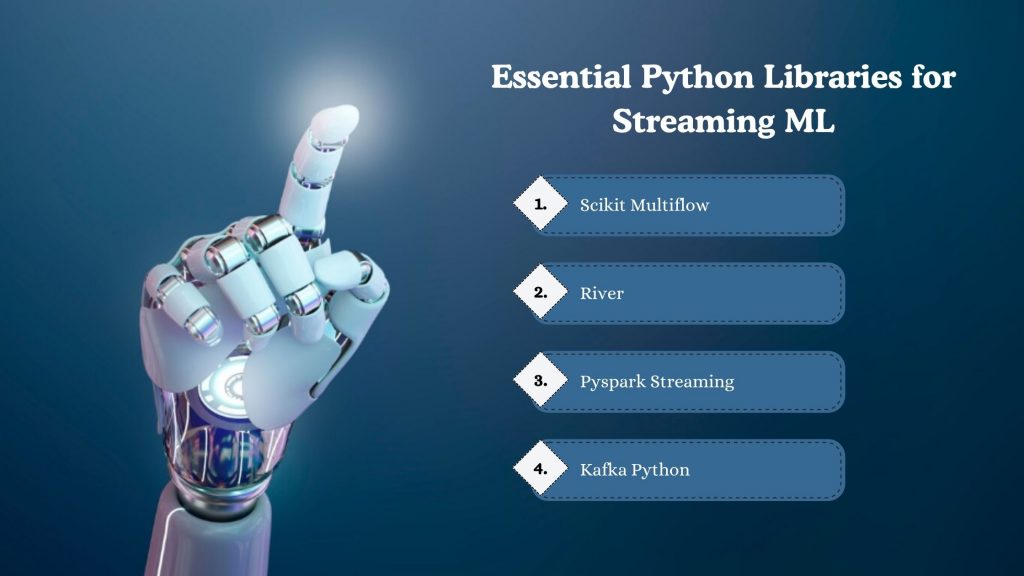

Essential Python Libraries for Streaming Machine Learning

When working with machine learning for streaming data with Python, choosing the right libraries is essential for building scalable, real-time systems. Python offers a diverse set of tools that cater to different aspects of the streaming ML pipeline—from data ingestion and preprocessing to model training and prediction. Each library has its strengths depending on your use case, data scale, and complexity.

Below are four of the most widely used libraries for Python stream data analysis.

Scikit-Multiflow

Scikit-Multiflow is a framework designed for learning from data streams and multi-output problems. It follows scikit-learn’s conventions and provides stream-specific algorithms like Hoeffding Trees, Adaptive Random Forests, and drift detection methods. Note that the project has since merged with creme to form River, so new development happens there.

It’s ideal for academic and experimental setups, offering extensive control over how models evolve over time with streaming input.
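Hoeffding Trees get their name from the Hoeffding bound, which tells the tree how many samples it needs before a split decision is statistically safe. After n observations of a quantity with range R, the observed mean is within epsilon = sqrt(R² · ln(1/δ) / (2n)) of the true mean with probability 1 − δ. The sketch below simply evaluates that formula; the function name is illustrative and not part of Scikit-Multiflow.

```python
import math


def hoeffding_bound(value_range: float, delta: float, n: int) -> float:
    """Epsilon such that the true mean lies within epsilon of the observed
    mean with probability 1 - delta, after n independent observations."""
    return math.sqrt((value_range ** 2) * math.log(1.0 / delta) / (2.0 * n))


# The bound tightens as more samples arrive, so a tree can wait until the
# gap between its two best split candidates exceeds epsilon before splitting.
for n in (100, 1_000, 10_000):
    print(n, round(hoeffding_bound(value_range=1.0, delta=1e-7, n=n), 4))
```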

River

River is a lightweight, modern Python library built for online machine learning, created by merging the creme and Scikit-Multiflow projects. It supports incremental learning, where models are updated one sample at a time, making it ideal for streaming scenarios.

River includes preprocessing tools, feature transformation, and various supervised algorithms, making it a comprehensive package for ML models on streaming data with minimal resource consumption.
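To show what "updated one sample at a time" means in practice, here is a small plain-Python sketch of an online logistic regression trained by stochastic gradient descent. It borrows the learn_one/predict_one naming used by River, but it is not River's implementation, and the class name, learning rate, and toy stream are assumptions made for illustration.

```python
import math
from collections import defaultdict


class OnlineLogisticRegression:
    """Tiny online classifier trained by stochastic gradient descent."""

    def __init__(self, learning_rate: float = 0.1) -> None:
        self.learning_rate = learning_rate
        self.weights = defaultdict(float)  # unseen features start at 0
        self.bias = 0.0

    def predict_proba_one(self, x: dict) -> float:
        z = self.bias + sum(self.weights[k] * v for k, v in x.items())
        z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-z))

    def predict_one(self, x: dict) -> bool:
        return self.predict_proba_one(x) >= 0.5

    def learn_one(self, x: dict, y: bool) -> None:
        # Gradient step on the log loss for this single sample
        error = self.predict_proba_one(x) - float(y)
        for k, v in x.items():
            self.weights[k] -= self.learning_rate * error * v
        self.bias -= self.learning_rate * error


model = OnlineLogisticRegression()
# Hypothetical stream: the label is True when the feature is positive
stream = [({"signal": s}, s > 0) for s in (1.0, -1.0, 2.0, -2.0)] * 50
for x, y in stream:
    model.learn_one(x, y)

print(model.predict_one({"signal": 1.5}), model.predict_one({"signal": -1.5}))
```

The model never sees the dataset as a whole. Each record nudges the weights and is then discarded, which is what keeps memory use flat on an unbounded stream.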

PySpark Streaming

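For large-scale real-time data processing, PySpark Streaming (built on Apache Spark) is a powerful solution. It allows you to create distributed streaming applications that can ingest data from sources like Kafka or TCP sockets (older Spark releases also shipped a Flume connector). The original DStream-based API is now considered legacy, and Spark’s documentation recommends Structured Streaming for new applications.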

PySpark excels in handling high-throughput scenarios and is widely used in production environments requiring both stream and batch data pipelines.

Kafka Python

Kafka Python (the kafka-python package) is a client library for integrating Apache Kafka into Python applications. It doesn’t offer ML functionality itself, but it plays a critical role in real-time data pipelines by letting Python code produce to and consume from Kafka, which acts as the message broker.

Combined with libraries like River or PySpark, Kafka Python helps deliver and consume high-velocity streaming data, ensuring that ML components receive timely input for real-time predictions.


Setting Up Your Streaming Data Environment in Python

Before diving into model building, it’s crucial to establish a reliable environment tailored for machine learning for streaming data with Python. A well-structured setup ensures smooth data flow from source to model, handles real-time transformations, and supports continuous training and inference.

In this section, we’ll break down the foundational components required to create a functional and scalable streaming ML pipeline in Python.

Installing Necessary Tools and Libraries

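To begin, ensure you have Python 3.7+ installed along with a virtual environment tool like venv or conda for isolation. Current releases of some of these libraries require a newer interpreter, so check each project's documentation for supported versions. Next, install the essential packages using pip:

bash

pip install river scikit-multiflow kafka-python pyspark

These libraries enable you to connect to live data sources, preprocess incoming data, and train ML models on streaming data. Scikit-Multiflow is no longer actively developed and may not install on recent Python versions; you can leave it out if you only plan to use River. If you’re working with Apache Kafka, you’ll also need to set up a local or remote Kafka broker using Docker or a cloud-managed service like Confluent Cloud.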

Connecting to Data Sources (Sockets, Kafka, MQTT)

Streaming data can come from various sources depending on your application. For real-time logging or simulated data, you can use socket programming in Python. For high-throughput, distributed applications, Kafka Python is the go-to tool. MQTT is another lightweight option commonly used in IoT scenarios.

python

from kafka import KafkaConsumer

consumer = KafkaConsumer('stream-topic', bootstrap_servers=['localhost:9092'])

This snippet connects your Python environment to a Kafka topic, allowing you to consume real-time data and pass it to your ML pipeline.
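Once connected, each message has to be decoded before a model can use it. The sketch below assumes the topic carries UTF-8 encoded JSON objects, which is a common choice but not something the consumer enforces. The decode_records helper is hypothetical, and stand-in messages are used so the example runs without a broker.

```python
import json
from types import SimpleNamespace
from typing import Any, Dict, Iterable, Iterator


def decode_records(messages: Iterable[Any]) -> Iterator[Dict[str, Any]]:
    """Turn raw messages into dicts, skipping payloads that fail to parse."""
    for message in messages:
        try:
            record = json.loads(message.value.decode("utf-8"))
        except (UnicodeDecodeError, json.JSONDecodeError):
            continue  # in production, log or dead-letter the bad payload
        if isinstance(record, dict):
            yield record


# Stand-in messages exposing a bytes `.value`, like Kafka consumer records.
# With a broker running, pass a KafkaConsumer instead of this list.
fake_messages = [
    SimpleNamespace(value=b'{"amount": 42.5, "country": "DE"}'),
    SimpleNamespace(value=b"not json"),
    SimpleNamespace(value=b'{"amount": 13.0, "country": "FR"}'),
]

records = list(decode_records(fake_messages))
print(records)
```

Skipping malformed payloads instead of raising keeps one bad message from stopping the whole stream.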

Sample Setup Structure

A basic streaming ML setup in Python typically includes:

- Data Ingestion Layer – e.g., Kafka, MQTT, sockets

- Preprocessing Layer – using River or Scikit-Multiflow

- Model Layer – real-time learning and updating

- Prediction Output – streaming results to dashboards, alerts, or databases

By organizing your environment around these components, you set the stage for a maintainable and scalable Python stream data analysis workflow.
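The four layers can be wired together in a few lines. The following is a skeleton only: the in-memory source, the zero-fill preprocessing rule, and the majority-class model are placeholders chosen to keep the sketch self-contained, and each would be swapped for a real component (Kafka, River transformers, an online learner) in practice.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Optional, Tuple

Record = Dict[str, Optional[float]]


def ingest(source: Iterable[Tuple[Record, int]]) -> Iterator[Tuple[Record, int]]:
    """Data ingestion layer: here just an in-memory iterable."""
    yield from source


def preprocess(x: Record) -> Dict[str, float]:
    """Preprocessing layer: replace missing values with 0.0 (placeholder rule)."""
    return {k: (0.0 if v is None else v) for k, v in x.items()}


class MajorityClassModel:
    """Model layer: predicts the most frequent label seen so far."""

    def __init__(self) -> None:
        self.counts: Dict[int, int] = {}

    def predict_one(self, x: Dict[str, float]) -> Optional[int]:
        return max(self.counts, key=self.counts.get) if self.counts else None

    def learn_one(self, x: Dict[str, float], y: int) -> None:
        self.counts[y] = self.counts.get(y, 0) + 1


def run_pipeline(source, model, sink: Callable[[dict, object], None]) -> None:
    for raw_x, y in ingest(source):
        x = preprocess(raw_x)
        sink(x, model.predict_one(x))  # prediction output layer
        model.learn_one(x, y)          # update once the label is known


outputs: List[object] = []
data = [({"temp": 21.0}, 0), ({"temp": None}, 0), ({"temp": 35.0}, 1)]
run_pipeline(data, MajorityClassModel(), lambda x, pred: outputs.append(pred))
print(outputs)
```

Keeping each layer behind a small function or class makes it possible to replace one of them without touching the others.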


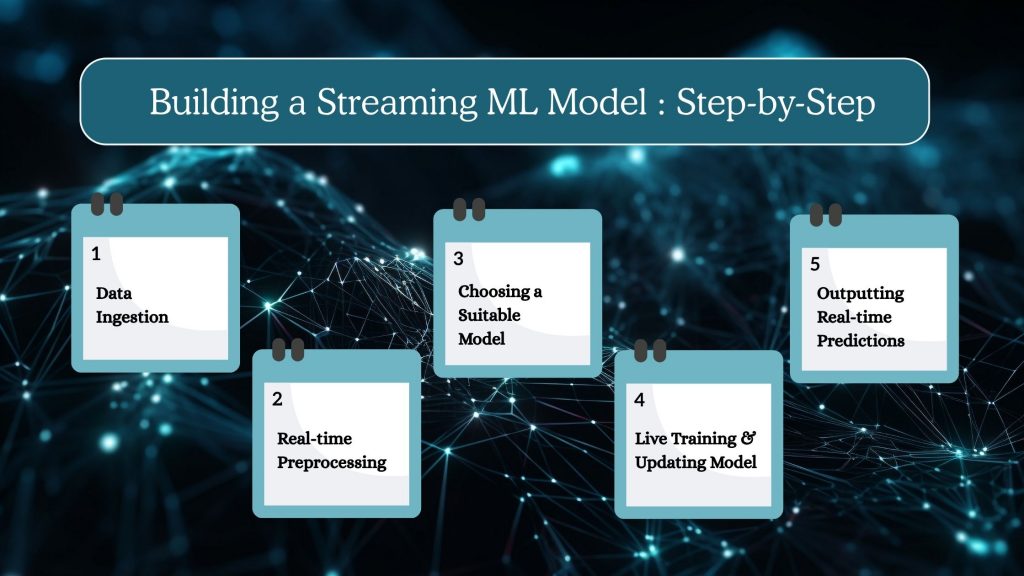

Building a Streaming ML Model: Step-by-Step

Constructing a real-time machine learning pipeline requires careful orchestration of data ingestion, transformation, model training, and continuous predictions. Unlike static models that are trained once and deployed, machine learning for streaming data with Python demands systems that can learn incrementally and adapt to changing data.

Let’s break down the step-by-step process of building a streaming ML model using Python.

Data Ingestion

The first step involves feeding your model with a continuous stream of data. This can come from sources like Kafka topics, socket streams, or MQTT brokers. For example, in a Kafka-based setup, you might consume data using the KafkaConsumer class from kafka-python. Each incoming record is processed in real time and sent downstream for analysis and model updates.

Real-time Preprocessing (Normalization, Handling Missing Data)

As data flows in, preprocessing must occur instantly. Using libraries like River or Scikit-Multiflow, you can normalize features, handle missing values, and apply transformations on the fly. Because these steps run record by record inside the pipeline, there is no separate offline preprocessing pass. For instance, River provides transformers like StandardScaler and StatImputer that can be used directly in streaming pipelines.

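python

from river import preprocessing

# Keeps running statistics per feature and scales each record with them
scaler = preprocessing.StandardScaler()

Because the scaler updates its statistics with every record, features stay on a comparable scale even as the incoming data shifts, which helps keep your model stable.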
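To see what incremental scaling and imputation involve, here is a plain-Python sketch that keeps a running mean and variance per feature using Welford's update. It illustrates the idea only and is not how River implements its transformers. The RunningScaler class and the sample records are hypothetical, and missing values are assumed to arrive as None.

```python
import math
from collections import defaultdict


class RunningScaler:
    """Impute missing values with the running mean, then standardize."""

    def __init__(self) -> None:
        self.count = defaultdict(int)
        self.mean = defaultdict(float)
        self.m2 = defaultdict(float)  # running sum of squared deviations

    def learn_one(self, x: dict) -> None:
        for name, value in x.items():
            if value is None:
                continue  # missing values do not update the statistics
            self.count[name] += 1
            delta = value - self.mean[name]
            self.mean[name] += delta / self.count[name]
            self.m2[name] += delta * (value - self.mean[name])  # Welford update

    def transform_one(self, x: dict) -> dict:
        scaled = {}
        for name, value in x.items():
            if value is None:
                value = self.mean[name]  # mean imputation
            n = self.count[name]
            std = math.sqrt(self.m2[name] / n) if n > 0 else 0.0
            scaled[name] = (value - self.mean[name]) / std if std > 0 else 0.0
        return scaled


scaler = RunningScaler()
for record in [{"temp": 10.0}, {"temp": 20.0}, {"temp": None}, {"temp": 30.0}]:
    scaler.learn_one(record)
    print(scaler.transform_one(record))
```

No past records are stored. Three numbers per feature are enough to scale every future value.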

Choosing a Suitable Model (e.g., Naive Bayes, Hoeffding Tree)

Not all models work well in streaming scenarios. Online algorithms such as Naive Bayes, Hoeffding Trees, and Adaptive Random Forests are best suited for streaming ML because they learn incrementally. Tools like Scikit-Multiflow and River offer these algorithms, allowing you to train models sample by sample—ideal for ML models on streaming data.

python

from river import naive_bayes

model = naive_bayes.GaussianNB()

These models are lightweight and designed to update continuously without retraining from scratch.
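Naive Bayes suits streaming because everything it needs, class counts plus a mean and variance per feature and class, can be updated in constant time per record. The sketch below shows that idea in plain Python. It is a simplified illustration rather than River's GaussianNB, and the class name, the variance smoothing constant, and the toy transactions are assumptions.

```python
import math
from collections import defaultdict


class OnlineGaussianNB:
    """Gaussian Naive Bayes with per-class running mean and variance."""

    def __init__(self) -> None:
        self.class_counts = defaultdict(int)
        # stats[label][feature] = [count, mean, m2]
        self.stats = defaultdict(lambda: defaultdict(lambda: [0, 0.0, 0.0]))

    def learn_one(self, x: dict, y) -> None:
        self.class_counts[y] += 1
        for name, value in x.items():
            s = self.stats[y][name]
            s[0] += 1
            delta = value - s[1]
            s[1] += delta / s[0]
            s[2] += delta * (value - s[1])

    def _log_score(self, label, x: dict) -> float:
        total = sum(self.class_counts.values())
        score = math.log(self.class_counts[label] / total)  # log prior
        for name, value in x.items():
            count, mean, m2 = self.stats[label][name]
            var = (m2 / count if count > 0 else 0.0) + 1e-9  # avoid zero variance
            score -= 0.5 * math.log(2 * math.pi * var)
            score -= ((value - mean) ** 2) / (2 * var)
        return score

    def predict_one(self, x: dict):
        if not self.class_counts:
            return None  # nothing learned yet
        return max(self.class_counts, key=lambda c: self._log_score(c, x))


nb = OnlineGaussianNB()
for amount, label in [(10.0, "normal"), (12.0, "normal"), (11.0, "normal"),
                      (95.0, "fraud"), (105.0, "fraud"), (100.0, "fraud")]:
    nb.learn_one({"amount": amount}, label)

print(nb.predict_one({"amount": 13.0}), nb.predict_one({"amount": 98.0}))
```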

Live Training & Updating Model

After preprocessing, each new data point is used to train or update the model. In River, the learn_one() method fits the model on one record at a time. This enables real-time learning and helps your system follow new trends in the data.

python

# x is a dict mapping feature names to values; y is the label
model.learn_one(x, y)

This approach keeps your model current as more data flows in.

Outputting Real-time Predictions

Finally, predictions are generated in real time and routed to downstream systems such as dashboards, alerts, or recommendation engines. For instance, in an eCommerce setup, your model might predict a user’s next likely purchase and update the user interface instantly. River’s predict_one() method is commonly used here to return a prediction for each new input.

python

prediction = model.predict_one(x)

This completes the cycle, allowing your model to ingest, process, learn, and predict continuously within a real-time loop.
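Routing is mostly plumbing: every prediction goes to storage, and only the ones that cross a threshold reach the alert channel. The sketch below assumes each scored record is a dict with a numeric "score" field. The function name, the threshold, and the list-based sinks are placeholders for whatever dashboard, database, or alerting client you use.

```python
from typing import Callable, Dict, Iterable, List


def route_predictions(
    scored: Iterable[Dict[str, float]],
    threshold: float,
    on_alert: Callable[[Dict[str, float]], None],
    on_record: Callable[[Dict[str, float]], None],
) -> None:
    """Send every prediction to storage and high scores to the alert channel."""
    for item in scored:
        on_record(item)
        if item["score"] >= threshold:
            on_alert(item)


# Hypothetical scored records and in-memory sinks
scored = [{"id": 1, "score": 0.10}, {"id": 2, "score": 0.93}, {"id": 3, "score": 0.55}]
stored: List[dict] = []
alerts: List[dict] = []
route_predictions(scored, threshold=0.9, on_alert=alerts.append, on_record=stored.append)
print(len(stored), [a["id"] for a in alerts])
```

Passing the sinks in as callables keeps the routing logic independent of any particular downstream system.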

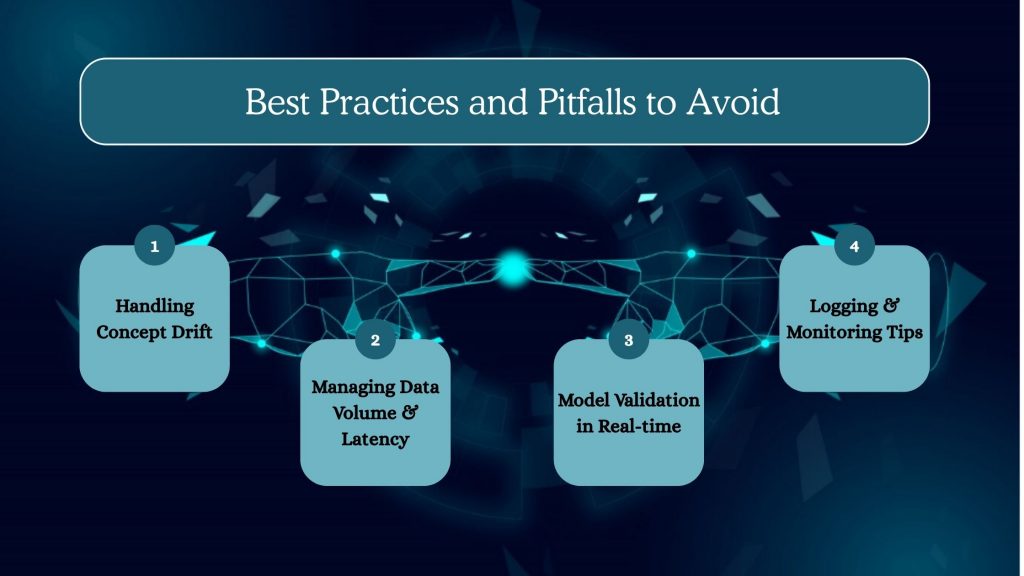

Best Practices and Pitfalls to Avoid

Building effective systems for machine learning for streaming data with Python requires more than just code—it demands thoughtful design, scalability considerations, and the ability to adapt to changing data conditions.

Let’s explore some of the most important best practices and common pitfalls you should keep in mind when deploying streaming ML systems.

Handling Concept Drift

One of the biggest challenges with streaming data is concept drift, where the underlying data patterns evolve over time. A model trained on past data may become obsolete if it doesn’t adapt.

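To counter this, use adaptive algorithms like Hoeffding Adaptive Trees or Adaptive Random Forests (a plain Hoeffding Tree keeps learning incrementally but does not by itself discard outdated structure), or leverage the drift detection mechanisms available in Scikit-Multiflow and River. Regular evaluation with recent data can also help identify performance degradation early.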
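A drift detector typically watches the model's error rate and raises a flag when it climbs well above its best level so far. The sketch below is a simplified take on the Drift Detection Method (DDM) idea, written in plain Python for illustration. The class name, the default levels, and the synthetic error stream are assumptions, and in a real project a library detector would normally be the better choice.

```python
import math


class SimpleDDM:
    """Simplified Drift Detection Method over a stream of prediction errors."""

    def __init__(self, min_samples: int = 30,
                 warning_level: float = 2.0, drift_level: float = 3.0) -> None:
        self.min_samples = min_samples
        self.warning_level = warning_level
        self.drift_level = drift_level
        self.reset()

    def reset(self) -> None:
        self.n = 0
        self.errors = 0
        self.p_min = math.inf
        self.s_min = math.inf

    def update(self, error: bool) -> str:
        """Feed one outcome; return 'stable', 'warning' or 'drift'."""
        self.n += 1
        self.errors += int(error)
        p = self.errors / self.n             # running error rate
        s = math.sqrt(p * (1 - p) / self.n)  # its standard deviation
        if self.n < self.min_samples or self.errors == 0:
            return "stable"                  # not enough evidence yet
        if p + s < self.p_min + self.s_min:  # best operating point so far
            self.p_min, self.s_min = p, s
        if p + s > self.p_min + self.drift_level * self.s_min:
            self.reset()                     # start fresh after a drift
            return "drift"
        if p + s > self.p_min + self.warning_level * self.s_min:
            return "warning"
        return "stable"


detector = SimpleDDM()
drift_at = None
for i in range(400):
    # Synthetic outcomes: 10% errors at first, then every prediction is wrong
    error = (i % 10 == 9) if i < 300 else True
    if detector.update(error) == "drift":
        drift_at = i
        break

print(drift_at)
```

On a drift signal, a common response is to reset or replace the model so it relearns from recent data only.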

Managing Data Volume & Latency

Streaming applications often deal with high-velocity data, and poor pipeline design can lead to lag or even system crashes. To manage this, implement data buffering, parallel processing, and efficient serialization formats like Avro or Protobuf. A broker like Kafka also helps by queuing data durably, so the ML model can consume it at its own pace.
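One simple form of buffering is micro-batching, where records are grouped into small lists so that downstream work such as a database write or a vectorized transform runs once per group instead of once per record. Here is a minimal sketch; the helper name and batch size are illustrative.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def micro_batches(stream: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a stream into lists of at most batch_size records."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # stream exhausted
        yield batch


batches = list(micro_batches(range(7), batch_size=3))
print(batches)
```

This version flushes on size only, so a slow stream can hold a partial batch back for a long time. A production buffer would usually also flush after a time limit.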

Model Validation in Real-time

In batch ML, you have the luxury of offline validation. But for ML models on streaming data, validation must happen on the fly. One approach is to use interleaved test-then-train, where each new data point is first used to test the model and then to train it. This ensures ongoing evaluation without interrupting the streaming process.
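The test-then-train loop is short enough to write by hand. The sketch below works with any model exposing predict_one and learn_one. The LastLabelModel baseline and the toy label sequence are stand-ins so the example runs on its own.

```python
from typing import Iterable, Tuple


class LastLabelModel:
    """Baseline that predicts the most recently seen label."""

    def __init__(self) -> None:
        self.last = None

    def predict_one(self, x: dict):
        return self.last

    def learn_one(self, x: dict, y) -> None:
        self.last = y


def prequential_accuracy(model, stream: Iterable[Tuple[dict, object]]) -> float:
    """Test on each record first, then train on it; return overall accuracy."""
    correct = 0
    total = 0
    for x, y in stream:
        y_pred = model.predict_one(x)  # 1. test on the still-unseen record
        correct += int(y_pred == y)
        total += 1
        model.learn_one(x, y)          # 2. then train on the same record
    return correct / total if total else 0.0


labels = [0, 0, 0, 1, 1, 1, 1, 0]
stream = [({"t": i}, y) for i, y in enumerate(labels)]
accuracy = prequential_accuracy(LastLabelModel(), stream)
print(accuracy)
```

Because every record is scored before the model learns from it, the metric is never computed on data the model has already seen.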

Logging & Monitoring Tips

Logging is vital for tracing model performance and system behavior. Log key metrics like latency, accuracy, data throughput, and drift alerts. Tools like Prometheus, Grafana, or even custom dashboards can help visualize these metrics in real time. It’s also wise to log unusual data inputs that may cause prediction errors, allowing for easier debugging and model retraining.
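As a starting point, the sketch below tracks accuracy and latency over a sliding window and writes a snapshot through Python's standard logging module. The RollingMonitor class, the logger name, and the window size are assumptions for illustration. Exporting the same numbers to Prometheus or Grafana would need their respective client libraries.

```python
import logging
import time
from collections import deque

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("streaming_ml")  # hypothetical logger name


class RollingMonitor:
    """Track accuracy and latency over the most recent predictions."""

    def __init__(self, window: int = 100) -> None:
        self.hits = deque(maxlen=window)
        self.latencies = deque(maxlen=window)

    def record(self, correct: bool, latency_s: float) -> None:
        self.hits.append(int(correct))
        self.latencies.append(latency_s)

    def snapshot(self) -> dict:
        n = len(self.hits)
        return {
            "window_size": n,
            "accuracy": sum(self.hits) / n if n else 0.0,
            "mean_latency_ms": 1000 * sum(self.latencies) / n if n else 0.0,
        }


monitor = RollingMonitor(window=3)
for y_true, y_pred in [(1, 1), (0, 1), (1, 1), (0, 0)]:
    start = time.perf_counter()
    correct = y_true == y_pred  # a comparison stands in for the prediction step
    monitor.record(correct, time.perf_counter() - start)

logger.info("metrics=%s", monitor.snapshot())
```

A windowed metric reacts to recent degradation much faster than an all-time average, which makes it more useful for alerting.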


Conclusion

Streaming data has transformed the way organizations make decisions—shifting from delayed, batch-based insights to real-time intelligence. With the increasing demand for instant analytics and adaptive learning, mastering machine learning for streaming data with Python is not just a niche skill—it’s a necessity.

From fraud detection and predictive maintenance to personalized user experiences, streaming ML unlocks new levels of responsiveness and automation across industries.

Python’s mature ecosystem, including tools like River, Scikit-Multiflow, PySpark, and Kafka, enables developers to build scalable, real-time machine learning pipelines. By understanding the core concepts, best practices, and potential pitfalls covered in this guide, you’re now equipped to begin your journey with Python stream data analysis.

As data continues to flow without pause, your models can now keep pace—learning, predicting, and adapting in real time.

🔗 Looking to implement real-time ML in your business? Connect with BigDataCentric to build customized streaming data solutions that scale.

FAQs

- How is streaming data different from batch data in ML?

Streaming data is continuously generated and processed in real time, while batch data is collected over time and processed in chunks. Streaming ML requires models that can adapt instantly, whereas batch ML allows for more time-consuming, offline training.

- How do I manage concept drift in streaming ML models?

Concept drift is handled by using adaptive algorithms that update the model as new data arrives. Techniques include sliding windows, incremental learning, and drift detection methods like ADWIN or DDM to maintain accuracy over time.

- Is Apache Spark necessary for streaming ML in Python?

Apache Spark is powerful for large-scale streaming data processing, but it’s not strictly necessary. Python offers lighter alternatives like River, Scikit-Multiflow, or Kafka with custom logic for real-time ML on smaller or mid-sized data streams.

- What does a basic streaming ML architecture in Python look like?

A typical architecture includes a data source (e.g., Kafka, socket), a stream processor (using Python libraries), a live ML model (online learner), and an output layer (dashboard, storage, or alert system). It processes, predicts, and updates in real time.

- What kind of model updates are supported in live streaming scenarios?

Live streaming scenarios support online learning and incremental updates, where models adjust with each new data point. Algorithms like Hoeffding Trees, Naive Bayes, and adaptive ensembles are commonly used for real-time model updates.

About Author

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.
