Machine Learning Model Management: What It Is and How to Implement It

Blog Summary:

Struggling to handle multiple models, shifting versions, and sudden performance drops in production? This guide explains how Machine Learning Model Management provides structure, traceability, and consistent results throughout the lifecycle. You’ll learn the essential components, methods, and tools needed to keep models reliable at scale.

In today’s data-driven landscape, businesses are not just building predictive models—they are operationalising them. But it’s one thing to train a model and another to manage its lifecycle continuously, especially when scale, accuracy, and governance matter. This is where effective Machine Learning Model Management comes into play.

Whether you’re deploying models across cloud environments or managing dozens of versions in production, having the right framework ensures you deliver consistent, transparent, and trustworthy outcomes.

In this blog, we will explore what model management entails, why it matters now more than ever, the key components required, best-practice methods, comparisons with experiment tracking, implementation steps, and the tools that support the full workflow.

What is Machine Learning Model Management?

Machine Learning Model Management is the structured process of organising, tracking, deploying, and maintaining models throughout their lifecycle. It ensures every version of a model, from initial experimentation to production deployment, is documented, governed, and monitored with complete visibility.

Instead of treating models as isolated files, this approach handles them as evolving assets that require continuous oversight. It streamlines how teams move models from research to production by centralising version control, metadata, performance metrics, and deployment history in a single framework.

This prevents confusion around which version is active, how it was trained, or what data was used. With clear workflows in place, organisations can scale ML model management confidently while maintaining accuracy, fairness, and reliability across environments.

Why Does ML Model Management Matter Today?

As organisations rely more on predictive systems, the need to maintain clarity, traceability, and stability across the entire model lifecycle has never been greater. Models that perform well during development often behave differently in real-world environments where data changes constantly.

Without structure, teams face duplicated experiments, inconsistent outputs, unpredictable failures, and compliance challenges that slow down innovation. A well-defined Machine Learning Model Management framework brings control to this growing complexity by standardising workflows, tightening governance, and ensuring every model version can be trusted long after deployment. Working with a machine learning development company can also help teams adopt these practices more effectively and scale them with confidence.

Here are some reasons why it matters:

Ensures Reproducible ML Results

Reproducibility remains one of the biggest challenges in model development. Small changes in datasets, parameters, or environments can produce entirely different outcomes. A structured ML model management framework captures training details, datasets, metadata, and environment configurations to ensure the same results can be recreated at any point.

This clarity helps teams confidently validate outputs, troubleshoot faster, and maintain consistency across experiments and production versions.
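As a rough illustration of what "capturing training details" can look like, here is a minimal sketch that records a run manifest using only the Python standard library. The function names (`fingerprint`, `build_run_manifest`) and the sample dataset and parameters are hypothetical, chosen for this example; a real setup would likely also record package versions and a code commit.

```python
import hashlib
import json
import platform
import sys


def fingerprint(payload: bytes) -> str:
    """Return a SHA-256 hex digest, used here as a content fingerprint."""
    return hashlib.sha256(payload).hexdigest()


def build_run_manifest(dataset_bytes: bytes, params: dict, seed: int) -> dict:
    """Collect the details needed to recreate a training run later."""
    return {
        "dataset_sha256": fingerprint(dataset_bytes),
        # sort_keys makes the hash independent of dict insertion order
        "params_sha256": fingerprint(json.dumps(params, sort_keys=True).encode()),
        "params": params,
        "seed": seed,
        "python_version": sys.version.split()[0],
        "platform": platform.platform(),
    }


# Hypothetical sample data; a real pipeline would read the dataset file instead.
sample_data = b"age,income\n34,52000\n"
manifest = build_run_manifest(sample_data, {"lr": 0.01, "depth": 6}, seed=42)
reordered = build_run_manifest(sample_data, {"depth": 6, "lr": 0.01}, seed=42)
changed_data = build_run_manifest(b"age,income\n35,52000\n", {"lr": 0.01, "depth": 6}, seed=42)
```

Two runs with the same data and parameters produce matching fingerprints, while a one-character change in the dataset produces a different `dataset_sha256`, which makes silent data changes easy to spot.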

Improves Deployment Efficiency

Deployment often slows teams down when dependencies, model files, and environment settings are scattered. With model management, deployments move through standardised workflows where packaging, versioning, and testing follow a repeatable pattern. This reduces errors, accelerates release cycles, and allows teams to deliver updates with minimal downtime.

Tools that support ML model lifecycle management also automate steps such as validation, containerisation, and rollback, making deployment more predictable and scalable.

Enhances Operational Accuracy

Once a model is live, accuracy can fluctuate due to changing user behaviour, seasonal trends, or data drift. A solid management framework enables continuous tracking of predictions, input distributions, latency, and model confidence scores.

Detecting anomalies early helps teams adjust or retrain models before issues affect customers or operations. This leads to more stable business outcomes and reliable performance, even in dynamic environments.

Reduces Model Performance Risks

Unmanaged models introduce risks such as bias, outdated logic, fairness issues, and incorrect predictions. Machine Learning Model Management minimises these risks by monitoring behaviour, tracking drift, maintaining version history, and enforcing approval workflows.

When something goes wrong, teams can quickly trace the root cause—whether it’s training data, code changes, or deployment errors—and take corrective action without guesswork.

Supports Enterprise Compliance

Industries such as finance, healthcare, and insurance require full transparency around how models are trained, why decisions are made, and which data is used. Model management provides an audit-ready trail that includes datasets, experiments, hyperparameters, and deployment versions.

This makes compliance with regulations and standards such as GDPR, ISO standards, and industry-specific rules manageable rather than overwhelming. It also simplifies internal audits, where explainability, fairness checks, and model lineage are crucial.

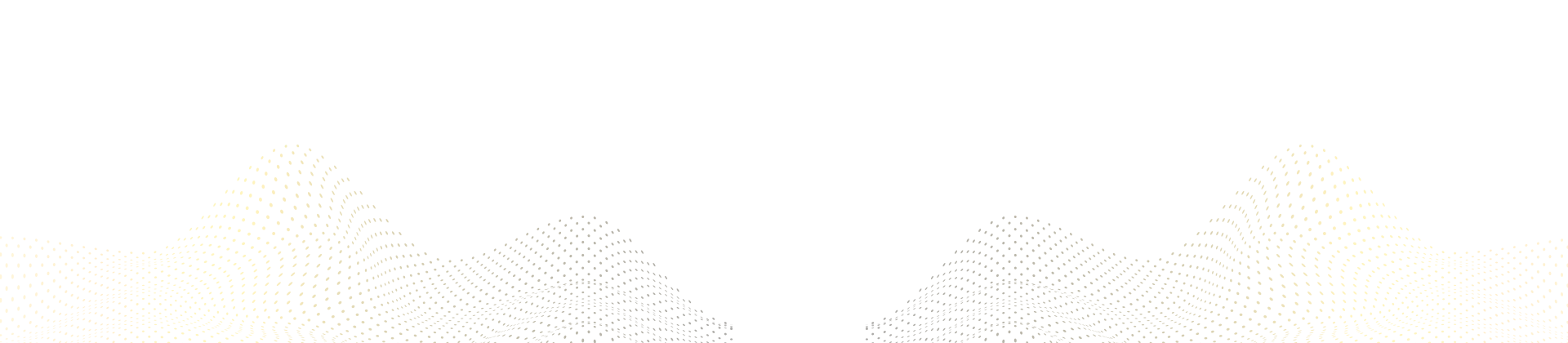

Key Components That Shape a Strong ML Framework

A reliable Machine Learning Model Management framework is built on systems that work together to support the full lifecycle—from initial data processing to deployment and ongoing oversight.

These components ensure teams can scale experiments, maintain clear audit trails, automate repetitive tasks, and maintain consistent model behaviour across environments. When combined, they help create a seamless flow that strengthens accuracy, governance, and operational reliability.

Reinforcement Learning

Reinforcement learning is used in scenarios where models continuously learn from interactions and adapt based on rewards or penalties. Managing such models requires tight control over feedback loops, environment versions, and policy updates to ensure stable decision-making as conditions shift.

Model Registry

A model registry serves as the central source of truth for all model versions, metadata, lineage, and deployment statuses. It helps teams identify which model is in use, track updates, manage approvals, and maintain complete visibility across development and production environments.
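To make the idea concrete, the following is a minimal in-memory sketch of a registry. The class names, stage labels, and artifact paths are assumptions made for illustration, not the API of any particular tool; a production registry would persist this state and enforce approvals.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str
    metrics: dict
    stage: str = "none"
    registered_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class ModelRegistry:
    """In-memory registry sketch; a real one would persist to a database."""

    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion

    def register(self, name, artifact_uri, metrics):
        versions = self._versions.setdefault(name, [])
        model_version = ModelVersion(name, len(versions) + 1, artifact_uri, metrics)
        versions.append(model_version)
        return model_version

    def promote(self, name, version, stage="production"):
        # Archive whichever version currently holds the stage, then promote.
        for model_version in self._versions[name]:
            if model_version.stage == stage:
                model_version.stage = "archived"
        target = self._versions[name][version - 1]
        target.stage = stage
        return target

    def current(self, name, stage="production"):
        candidates = self._versions.get(name, [])
        return next((mv for mv in candidates if mv.stage == stage), None)


# Hypothetical model name and artifact paths.
registry = ModelRegistry()
registry.register("churn", "models/churn/v1.pkl", {"auc": 0.81})
registry.register("churn", "models/churn/v2.pkl", {"auc": 0.84})
registry.promote("churn", 1)
registry.promote("churn", 2)
active = registry.current("churn")
```

After the second promotion, version 2 is the production model and version 1 is archived, so the question "which version is active?" has a single answer.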

Data Ingestion

Reliable data ingestion pipelines ensure that every model is trained and evaluated with accurate, fresh, and consistent datasets. Tracking data sources, transformations, and access patterns becomes essential for reproducibility and compliance, especially when datasets evolve frequently.

Model Monitoring

Model monitoring continuously tracks performance, drift, fairness, latency, and operational health. Effective ML model lifecycle management requires real-time insights to detect issues early, trigger alerts, and support automated retraining or rollback strategies when necessary.

ML Pipeline Orchestrator

An orchestrator automates and coordinates the tasks involved in building, training, validating, and deploying ML models. It reduces manual errors, speeds up development cycles, and ensures processes are executed reliably and repeatably across teams and environments.
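At its core, an orchestrator runs tasks in dependency order. The sketch below shows that idea with Python's standard-library `graphlib` module (Python 3.9+); the task names and the `run_pipeline` helper are hypothetical, and real orchestrators add scheduling, retries, and logging on top.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(tasks: dict, dependencies: dict) -> list:
    """Run each task after all of its dependencies; return the execution order."""
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        order.append(name)
    return order


log = []
# Placeholder tasks that only record that they ran.
tasks = {
    "ingest": lambda: log.append("ingested data"),
    "train": lambda: log.append("trained model"),
    "validate": lambda: log.append("validated model"),
    "deploy": lambda: log.append("deployed model"),
}
# Each key maps to the set of tasks that must finish first.
dependencies = {
    "ingest": set(),
    "train": {"ingest"},
    "validate": {"train"},
    "deploy": {"validate"},
}
execution_order = run_pipeline(tasks, dependencies)
```

Because the order is derived from the declared dependencies rather than from the order in which tasks were written, adding a new step only requires stating what it depends on.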

Proven Methods to Improve ML Management Outcomes

Strengthening Machine Learning Model Management requires a blend of structured processes, automation, and continuous oversight. As models evolve and production environments shift, these proven practices help teams maintain accuracy.

They also reduce operational friction and ensure every model version remains dependable throughout its lifecycle. Here are some proven methods:

Standardise End-to-End Workflows

Consistent workflows reduce ambiguity across teams and environments. By defining how data is prepared, how models are trained, and how deployment decisions are made, organisations can avoid duplication, misalignment, and unpredictable performance variations.

Standardisation also supports smoother collaboration between data science, engineering, and operations.

Robust Deployment Approach

A strong deployment strategy ensures that models transition into production without unexpected failures. Techniques such as canary deployments, shadow testing, and automated rollback mechanisms help validate behaviour before a full release.

These practices increase confidence, minimise downtime, and create safer pathways for frequent updates.
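One way to picture a canary deployment with automated rollback is the sketch below. It assumes requests carry an ID that can be hashed into a bucket, and that error rates for both models are measured elsewhere; the function names and the 2% tolerance are illustrative choices, not a standard.

```python
import hashlib


def route_request(request_id: str, canary_percent: int) -> str:
    """Deterministically send a fixed share of traffic to the canary model."""
    digest = hashlib.sha256(request_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


def should_roll_back(stable_error_rate: float, canary_error_rate: float,
                     tolerance: float = 0.02) -> bool:
    """Roll back when the canary is worse than stable by more than the tolerance."""
    return canary_error_rate > stable_error_rate + tolerance


# Hypothetical request IDs; roughly 10% should land on the canary.
routes = [route_request(f"req-{i}", canary_percent=10) for i in range(1000)]
canary_share = routes.count("canary") / len(routes)
```

Hashing the request ID keeps routing stable, so the same request always reaches the same model version, which makes canary results easier to compare and debug.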

Enforce Access Restrictions

Clear access controls prevent unauthorised changes and maintain integrity throughout the entire ML model management process. Role-based permissions, approval gates, and audit logs ensure that only authorised users can update, deploy, or retire models.

These controls support both operational safety and regulatory requirements.
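A minimal sketch of role-based permissions with an audit log might look like this. The role names, actions, and users are hypothetical; a real system would tie into an identity provider and write the log to durable storage.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "data_scientist": {"register"},
    "ml_engineer": {"register", "deploy"},
    "admin": {"register", "deploy", "retire"},
}

audit_log = []


def authorise(user: str, role: str, action: str, model: str) -> bool:
    """Check a role's permission and record the attempt, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {"user": user, "role": role, "action": action, "model": model, "allowed": allowed}
    )
    return allowed


engineer_can_deploy = authorise("ana", "ml_engineer", "deploy", "churn")
scientist_can_deploy = authorise("bo", "data_scientist", "deploy", "churn")
unknown_role_can_retire = authorise("cy", "contractor", "retire", "churn")
```

Denied attempts are logged alongside approved ones, since the audit trail is often most useful when something was blocked.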

Track Drift Continuously

Data drift, concept drift, and model degradation can appear subtly over time. Continuous tracking enables teams to detect early shifts in behaviour and performance before they affect outcomes.

Real-time monitoring and periodic evaluations help maintain accuracy and prevent models from becoming outdated.
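As one possible way to quantify data drift, the sketch below computes the Population Stability Index (PSI) for a single numeric feature. PSI is a technique chosen here for illustration, not one the article prescribes, and the 0.2 alert threshold is a commonly cited rule of thumb that should be treated as an assumption to tune.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data: the production sample is shifted towards higher values.
training_sample = [i / 100 for i in range(100)]
production_sample = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(training_sample, training_sample)
psi_shifted = population_stability_index(training_sample, production_sample)
drift_detected = psi_shifted > 0.2  # assumed threshold
```

Bin edges come from the training sample, so the score measures how far production data has moved away from what the model originally saw.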

Data Collection

Ongoing collection of relevant training and feedback data helps models stay aligned with real-world behaviour. Capturing high-quality data along with metadata and contextual signals creates a reliable foundation for retraining, experimentation, and long-term consistency.

Machine Learning Model Management vs Experiment Tracking

Model management and experiment tracking often work side by side but serve different purposes within the ML workflow.

The table below highlights their focus areas, workflow differences, ideal use cases, and how the two processes complement each other.

| Criteria | Machine Learning Model Management | Experiment Tracking |
|---|---|---|
| Primary Focus | Oversees models after they are selected for production, ensuring stability, governance, and continuous performance. | Captures and compares experiments, metrics, datasets, and hyperparameters during research. |
| Workflow Style | Operational workflows: versioning, deployment, monitoring, auditing, access control, compliance. | Research workflows: iterative experimentation, evaluation, and performance comparison. |
| Best Used When | A model is ready for staging or production and needs controlled updates, tracking, and monitoring. | During early development to evaluate multiple approaches and identify the best-performing model. |
| How They Complement Each Other | Manages the chosen model after experimentation concludes, ensuring reliability at scale. | Helps identify the best candidate model that model management processes will later govern. |

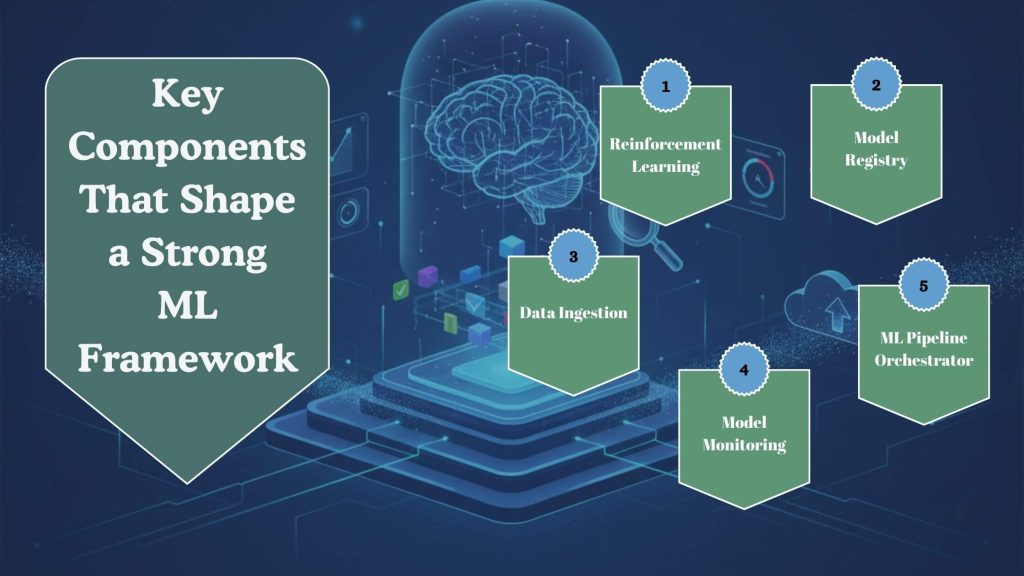

Steps to Implement ML Model Management

Implementing Machine Learning Model Management requires a structured, repeatable framework that brings clarity to every stage—from experimentation to deployment and long-term monitoring.

The goal is to ensure that models remain traceable, high-performing, and aligned with business needs even as data, ML infrastructure, and requirements evolve.

A well-defined process also helps teams reduce operational risks, maintain version visibility, and improve collaboration across data science and engineering functions.

Define Lifecycle Stages Clearly

Start by mapping out each stage of your ML model lifecycle management process, including experimentation, validation, staging, production, and retirement. Clear definitions help teams understand responsibilities, handoff points, and required documentation at each step.

This eliminates confusion and supports predictable movement from one stage to another.
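The stages named above can be encoded as a small state machine so that invalid jumps are rejected. The allowed transitions in this sketch are an assumption for illustration; each team would define its own rules.

```python
from enum import Enum


class Stage(Enum):
    EXPERIMENTATION = "experimentation"
    VALIDATION = "validation"
    STAGING = "staging"
    PRODUCTION = "production"
    RETIRED = "retired"


# Assumed policy: move one step forward or back, or retire from any stage.
ALLOWED_TRANSITIONS = {
    Stage.EXPERIMENTATION: {Stage.VALIDATION, Stage.RETIRED},
    Stage.VALIDATION: {Stage.STAGING, Stage.EXPERIMENTATION, Stage.RETIRED},
    Stage.STAGING: {Stage.PRODUCTION, Stage.VALIDATION, Stage.RETIRED},
    Stage.PRODUCTION: {Stage.STAGING, Stage.RETIRED},
    Stage.RETIRED: set(),
}


def transition(current: Stage, target: Stage) -> Stage:
    """Return the new stage, or raise if the move skips a required step."""
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"Cannot move from {current.value} to {target.value}")
    return target


stage = Stage.EXPERIMENTATION
for next_stage in (Stage.VALIDATION, Stage.STAGING, Stage.PRODUCTION):
    stage = transition(stage, next_stage)
```

With rules like these, a model cannot go straight from experimentation to production, which keeps handoff points explicit.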

Set Up Version Control

Version control is essential for tracking code, data, models, and configuration changes. By maintaining a detailed lineage of every component, teams can easily roll back to earlier versions, compare performance, or reproduce results.

This prevents version conflicts and maintains full traceability throughout the lifecycle.

Build CI/CD Integrations

Continuous Integration and Continuous Deployment pipelines automate model testing, validation, and promotion to staging or production environments.

CI/CD ensures that updates are consistent, reduces manual errors, and speeds up deployment cycles. Automated checks also help maintain quality as models evolve.
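An automated check in such a pipeline could be as simple as a promotion gate that compares a candidate model with the current baseline. The metric names (`accuracy`, `p95_latency_ms`) and the thresholds below are hypothetical and would come from your own evaluation step.

```python
def promotion_gate(candidate: dict, baseline: dict,
                   min_gain: float = 0.0, max_latency_ms: float = 200.0):
    """Return (passed, reasons) for promoting a candidate over the baseline."""
    reasons = []
    if candidate["accuracy"] < baseline["accuracy"] + min_gain:
        reasons.append("accuracy below baseline")
    if candidate["p95_latency_ms"] > max_latency_ms:
        reasons.append("latency above budget")
    return (not reasons, reasons)


# Hypothetical evaluation results.
baseline = {"accuracy": 0.90, "p95_latency_ms": 120.0}
good_candidate = {"accuracy": 0.92, "p95_latency_ms": 130.0}
slow_candidate = {"accuracy": 0.93, "p95_latency_ms": 350.0}

good_result = promotion_gate(good_candidate, baseline)
slow_result = promotion_gate(slow_candidate, baseline)
```

Returning the reasons alongside the pass or fail flag gives the CI job something useful to print when a promotion is blocked.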

Deploy Monitoring Systems

Monitoring tools keep production models accountable by tracking drift, accuracy, fairness, latency, and operational health. Real-time alerts enable teams to quickly identify issues and trigger retraining, rollbacks, or model substitutions as needed. Strong monitoring is the backbone of long-term model reliability.
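For a sense of how a simple alert rule might work, here is a sketch of a rolling accuracy monitor. It assumes ground-truth labels eventually arrive for each prediction; the class name, window size, and threshold are illustrative.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent predictions and flag drops."""

    def __init__(self, window: int = 100, threshold: float = 0.9):
        self.outcomes = deque(maxlen=window)  # oldest results drop off automatically
        self.threshold = threshold

    def record(self, prediction, actual) -> None:
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        # Only alert once the window is full, to avoid noisy early readings.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy < self.threshold


monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(prediction, actual)
alert_when_healthy = monitor.should_alert()

for prediction, actual in [(1, 0), (0, 1)]:
    monitor.record(prediction, actual)
alert_after_errors = monitor.should_alert()
```

In this example the alert fires only after two recent errors pull the four-prediction window down to 50% accuracy; the same signal could trigger a retraining job or a rollback.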

Tools That Improve Your End-to-End ML Workflow

Choosing the right tools is essential for maintaining visibility, automation, and consistency across every stage of Machine Learning Model Management. These platforms help streamline experimentation, versioning, deployment, and monitoring.

They also reduce manual effort and minimise operational risks. Integrating them into your workflow creates a stronger, more scalable ML foundation.

Neptune.ai

Neptune.ai serves as a central hub for managing model metadata, experiments, and production-level performance insights. With organised tracking and easy collaboration features, it helps teams maintain a clean history of runs, results, and model versions, making long-term management far more structured.

MLflow

MLflow simplifies experiment logging, model packaging, and deployment with its modular components. Its model registry is especially valuable for ML model management, enabling teams to organise versions, track transitions, and maintain full lifecycle visibility.

It integrates well with popular ML libraries and cloud services, making it highly adaptable.

Fiddler

Fiddler focuses on monitoring, explainability, and responsible AI governance. It offers real-time drift detection, bias insights, and performance dashboards to help teams understand how a model behaves in production.

This level of transparency supports both operational accuracy and compliance requirements.

TorchServe

TorchServe provides a streamlined way to deploy PyTorch models at scale. With features such as model versioning, logging, multi-model serving, and auto-batching, it enhances operational efficiency and simplifies production management for organisations that heavily rely on PyTorch-based solutions.

BentoML

BentoML allows teams to package, deploy, and manage models as APIs with a consistent, framework-agnostic workflow. Its flexibility makes it easier to integrate into CI/CD pipelines, automate deployment steps, and maintain reliable production serving across varied infrastructure environments.

How BigDataCentric Supports Advanced ML Workflows

BigDataCentric helps organisations build stable, scalable, and fully governed machine learning workflows by aligning model development with operational excellence. With expertise across data science, engineering, and lifecycle governance, the team ensures that every model progresses smoothly through each stage.

This structured, transparent process supports a reliable transition from experimentation to production. Their approach focuses on creating end-to-end pipelines that reduce manual overhead, improve reproducibility, and support long-term performance reliability.

By integrating model registries, CI/CD automation, and continuous monitoring systems, BigDataCentric enables smoother transitions between lifecycle stages while maintaining strict version control and compliance.

Their experience in building production-ready analytics systems also helps businesses prevent drift-related issues and keep models aligned with changing real-world conditions.

Whether it’s optimising workflows, modernising existing pipelines, or deploying complex model architectures, BigDataCentric provides the technical foundation needed to strengthen ML model lifecycle management and support future-ready predictive solutions.

Conclusion

Machine Learning Model Management has become a fundamental requirement for organisations that depend on predictive systems at scale. As models evolve, data shifts, and environments change, the ability to track, monitor, and govern every version becomes essential for maintaining accuracy and trust.

A well-structured framework not only ensures reproducible results but also strengthens deployment workflows, reduces risks, and supports long-term operational stability.

By combining lifecycle clarity, version control, monitoring systems, and the right tools, teams can transform model operations from a fragmented process into a streamlined, reliable ecosystem.

With expert support from partners like BigDataCentric, businesses can build end-to-end workflows that keep their models consistent, transparent, and aligned with real-world needs.

FAQs

What are the 4 types of machine learning models?

The four main types are supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning. Each type handles data differently and is used for specific problem scenarios. These categories guide how models learn patterns and make predictions.

Is ChatGPT a machine learning model?

Yes, ChatGPT is a machine learning model based on a large-scale transformer architecture. It is trained on massive text datasets to understand patterns, generate human-like responses, and perform language tasks. It falls under the category of deep learning models.

What types of metadata should be stored for ML models?

Key metadata includes model version, training parameters, datasets used, evaluation metrics, environment details, and deployment history. Storing this information ensures traceability, reproducibility, and better lifecycle management. It also supports compliance and audit requirements.

How often should ML models be retrained or updated?

Retraining frequency depends on data drift, changes in user behaviour, and performance degradation. Some models need monthly or quarterly updates, while others can run longer with stable data. Regular monitoring helps determine the ideal retraining schedule.

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.
