Prompt Engineering vs Fine Tuning: Guide to Choosing the Right Method

Blog Summary:

In this blog, we explore Prompt Engineering vs Fine Tuning and how each approach impacts AI performance. You’ll learn their key differences, advantages, and practical use cases. The guide helps businesses decide which method fits their goals for accuracy, efficiency, and adaptability.

In recent years, large language models (LLMs) have become indispensable across countless domains—from customer support and content creation to legal workflows and healthcare diagnostics. However, optimizing these models to deliver on unique organizational needs requires thoughtful customization.

Two leading approaches—prompt engineering and fine-tuning—offer distinct paths forward, each reflecting different trade-offs in speed, precision, resource investment, and long-term adaptability.

According to a recent AI engineering report from Amplify Partners, 41% of organizations are fine-tuning the models they use, while 70% incorporate retrieval-augmented generation (RAG) or advanced prompting strategies into their workflows. This suggests that teams are not choosing between approaches; they are increasingly combining them to balance flexibility, cost, and performance.

In this blog on Prompt Engineering vs Fine Tuning, we’ll delve into how each method works, highlight their respective advantages, compare them side by side, and provide actionable guidance on when—and how—to choose one approach over the other.

We’ll also demonstrate how BigDataCentric’s tailored data strategies and model customization techniques can efficiently accelerate both your prototyping and production journeys.

Prompt Engineering Explained

Prompt engineering is the process of carefully designing and structuring the input provided to a large language model (LLM) to produce the most accurate, relevant, and context-aware output possible.

Instead of altering the model’s internal parameters or retraining it, prompt engineering focuses entirely on how you communicate with the model.

At its core, prompt engineering is about understanding the model’s strengths, limitations, and tendencies, and then crafting prompts that guide it toward the desired outcome. This may involve:

- Providing clear instructions instead of vague requests.

- Using contextual examples to steer the model’s response style.

- Breaking down complex tasks into step-by-step prompts for better clarity.

- Incorporating few-shot prompting, where a handful of worked examples are embedded in the prompt itself, or zero-shot prompting, where the model is guided by instructions alone.

Because prompt engineering doesn’t require altering the underlying model, it’s often the fastest and most cost-effective method for tailoring responses.

This makes it ideal for experimentation, rapid prototyping, and scenarios where you want to adapt the model for different tasks without significant infrastructure investment.
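To make the few-shot idea concrete, here is a minimal sketch of how such a prompt might be assembled. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sentiment task are illustrative assumptions, not a format any particular model requires.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, worked examples, and a new input into one prompt.

    With an empty `examples` list this becomes a zero-shot prompt.
    """
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The final "Output:" is left open for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


# Hypothetical sentiment-labelling task, used only for illustration.
prompt = build_few_shot_prompt(
    instruction="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("The delivery was fast and the packaging was great.", "positive"),
        ("The item broke after two days.", "negative"),
    ],
    query="Customer support resolved my issue within minutes.",
)
print(prompt)
```

Passing an empty list of examples produces the zero-shot version of the same prompt, which makes it easy to compare the two styles on the same task.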

A practical example is a retail business that wants to generate product descriptions with a consistent tone. Instead of retraining the model on thousands of examples, they could use prompt engineering to provide a structured request—such as specifying tone, length, and style within the input text—ensuring uniform results across different product categories.
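A structured request of this kind could look something like the sketch below. The `StyleGuide` fields, the product names, and the exact wording of the template are hypothetical; the point is only that tone, length, and style are fixed once and reused for every product.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StyleGuide:
    """Brand constraints that stay constant across product categories."""
    tone: str
    max_words: int
    style: str


def product_description_prompt(
    product_name: str, features: List[str], guide: StyleGuide
) -> str:
    """Build a prompt that pins tone, length, and style for one product."""
    feature_lines = "\n".join(f"- {feature}" for feature in features)
    return (
        f"Write a product description for: {product_name}\n"
        f"Tone: {guide.tone}\n"
        f"Length: at most {guide.max_words} words\n"
        f"Style: {guide.style}\n"
        f"Key features:\n{feature_lines}\n"
        "Return only the description text."
    )


# Hypothetical brand settings and products, for illustration only.
guide = StyleGuide(tone="warm and friendly", max_words=60, style="short sentences, no jargon")
mug_prompt = product_description_prompt(
    "Ceramic Travel Mug", ["double-walled", "dishwasher safe"], guide
)
lamp_prompt = product_description_prompt(
    "LED Desk Lamp", ["adjustable arm", "USB charging port"], guide
)
print(mug_prompt)
```

Because the style constraints live in one place, changing the brand voice means editing a single `StyleGuide` value rather than retraining anything.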

Prompt engineering is, in many ways, like learning to “speak the model’s language.” The better you phrase your request, the better the model understands your intent and delivers relevant results.

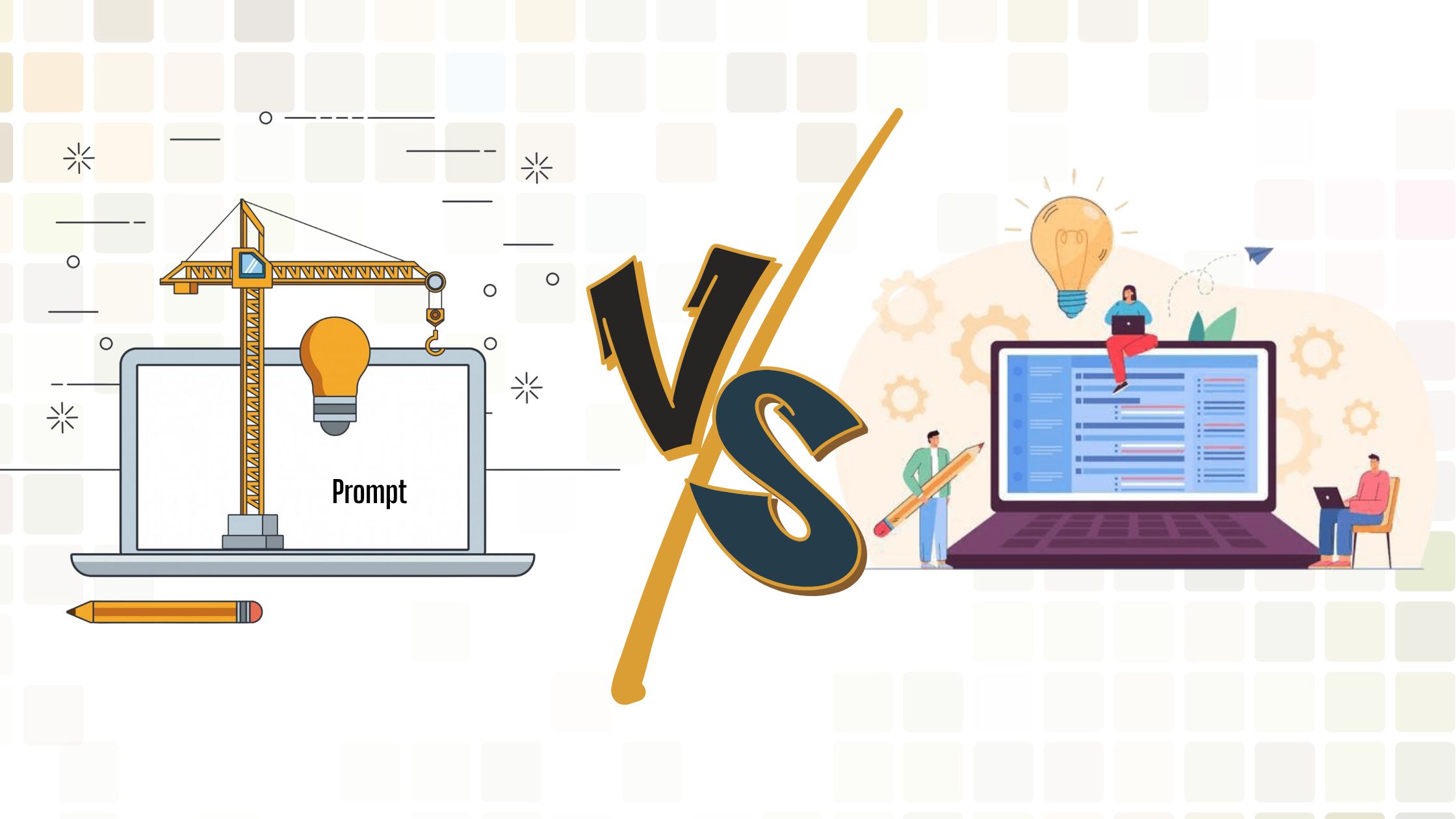

Advantages of Prompt Engineering

Prompt engineering offers a practical and accessible approach to achieving optimal results from large language models, eliminating the need for complex retraining or substantial infrastructure investment. It’s a method that focuses on improving how you communicate with the model, ensuring it understands the intent clearly and responds accordingly. This approach works well across various industries, from customer service to marketing, as it offers speed, cost savings, and adaptability without compromising quality.

Below are the advantages that make prompt engineering a valuable technique for both businesses and developers.

Enhanced AI Interaction and Output Quality

Well-crafted prompts can dramatically improve how an AI model interprets input and generates responses. By providing clear instructions, relevant context, and examples, the model delivers more accurate and targeted results. This minimizes vague answers and reduces the need for back-and-forth clarifications, making interactions smoother and more productive.

Increased Efficiency and Productivity

Prompt engineering enables quick adjustments to model behavior without long retraining cycles. Teams can experiment with different prompt formats, test variations, and deploy improvements rapidly, saving both time and resources. This agility is particularly valuable for projects that require frequent updates or have tight deadlines.
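One way to picture this kind of experimentation is a small harness that runs several prompt variants over the same test cases and scores each one. The sketch below is an assumption-laden illustration: `stub_model` is a toy stand-in for a real LLM call, and exact-match scoring is only one of many possible metrics.

```python
from typing import Callable, Dict, List, Tuple


def score_prompt_variants(
    variants: Dict[str, str],
    cases: List[Tuple[str, str]],
    model: Callable[[str], str],
) -> Dict[str, float]:
    """Return, per variant, the fraction of cases whose output equals the expected answer."""
    scores = {}
    for name, template in variants.items():
        hits = 0
        for text, expected in cases:
            output = model(template.format(text=text))
            if output.strip().lower() == expected.lower():
                hits += 1
        scores[name] = hits / len(cases)
    return scores


def stub_model(prompt: str) -> str:
    """Toy stand-in for a real LLM call; swap in your provider's client."""
    label = "negative" if "broke" in prompt else "positive"
    # The toy only returns a bare label when explicitly asked for one word.
    if "one word" in prompt:
        return label
    return f"I think the sentiment is {label}."


variants = {
    "vague": "What about this review? {text}",
    "explicit": "Answer in one word, positive or negative. Review: {text}",
}
cases = [
    ("It broke after a day.", "negative"),
    ("Works perfectly.", "positive"),
]
scores = score_prompt_variants(variants, cases, stub_model)
print(scores)
```

With a real model behind the `model` callable, the same loop lets a team compare wording changes in minutes rather than waiting on a training run.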

Enhanced User Experience

When prompts are optimized, users receive contextually accurate, relevant, and clear responses. This leads to a more satisfying experience, whether interacting with a chatbot, reading AI-generated content, or utilizing AI tools for analysis. Consistently useful outputs increase trust and encourage wider adoption of AI-powered solutions.

Flexibility and Adaptability

With prompt engineering, a single AI model can be applied to multiple tasks simply by altering the prompt structure. Teams can switch between domains, tones, and formats without touching the underlying model, which makes the approach highly adaptable to diverse business needs.

Fine Tuning Explained

Fine-tuning is the process of retraining a pre-trained large language model (LLM) on a specific dataset to adapt it for a particular task, domain, or style. Unlike prompt engineering, which relies on modifying inputs to guide the model’s responses, fine-tuning directly updates the model’s internal parameters. This results in deeper customization and often more consistent performance for specialized needs.

The process typically involves preparing a curated dataset that reflects the desired output, training the model with this data, and validating the results to ensure accuracy. For example, a healthcare company might fine-tune a model using medical records, clinical guidelines, and research papers to provide precise, compliant, and domain-specific responses.
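The data-preparation step might be sketched as follows: clean the raw examples, hold out a validation set, and serialize each record as one JSON line. The `prompt`/`completion` field names and the 80/20 split are assumptions for illustration; fine-tuning platforms differ in the schema they expect, so check your provider's documentation.

```python
import json
import random
from typing import Dict, List, Tuple

Record = Dict[str, str]


def split_examples(
    examples: List[Record],
    validation_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[Record], List[Record]]:
    """Drop incomplete and duplicate records, shuffle, and hold out a validation set."""
    seen = set()
    cleaned = []
    for record in examples:
        prompt = record.get("prompt", "").strip()
        completion = record.get("completion", "").strip()
        if not prompt or not completion or (prompt, completion) in seen:
            continue
        seen.add((prompt, completion))
        cleaned.append({"prompt": prompt, "completion": completion})
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(cleaned)
    n_validation = int(len(cleaned) * validation_fraction)
    return cleaned[n_validation:], cleaned[:n_validation]


def to_jsonl(records: List[Record]) -> str:
    """Serialize records as JSON Lines, one example per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)


# Placeholder records; real data would come from your curated domain corpus.
raw_examples = [
    {"prompt": f"Question {i}", "completion": f"Answer {i}"} for i in range(1, 6)
]
raw_examples.append({"prompt": "Question 1", "completion": "Answer 1"})  # duplicate
raw_examples.append({"prompt": "Question 6", "completion": "   "})  # incomplete

train, validation = split_examples(raw_examples)
print(to_jsonl(train))
```

Keeping the validation examples out of training is what makes the later accuracy check meaningful.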

Fine-tuning can be performed at different levels, ranging from full fine-tuning, which updates all of the model's weights, to parameter-efficient methods such as LoRA (Low-Rank Adaptation), which freeze the original weights and train only a small number of additional parameters. This lets teams balance depth of customization against computational cost.
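A quick calculation shows why low-rank adaptation is cheaper. LoRA represents the update to a weight matrix of shape `d_out x d_in` as the product of two small matrices, `B` (`d_out x r`) and `A` (`r x d_in`), so only `r * (d_out + d_in)` values are trained. The layer size and rank below are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable values when the whole weight matrix is updated."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable values for a rank-`rank` update: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


# Hypothetical single layer: 4096 x 4096 weights, adapter rank 8.
d_out, d_in, rank = 4096, 4096, 8
full = full_update_params(d_out, d_in)
lora = lora_update_params(d_out, d_in, rank)
print(f"full update: {full:,} parameters")
print(f"LoRA update: {lora:,} parameters ({lora / full:.2%} of full)")
```

For this example layer the adapter trains 65,536 values instead of 16,777,216, which is 256 times fewer. Actual savings depend on which layers are adapted and the rank chosen.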

While fine-tuning offers greater control and reliability for specialized applications, it comes with higher resource requirements, longer implementation time, and the need for high-quality labeled data. However, for industries that demand accuracy, compliance, and consistent performance, it can be the preferred choice over more flexible but less specialized methods, such as prompt engineering.

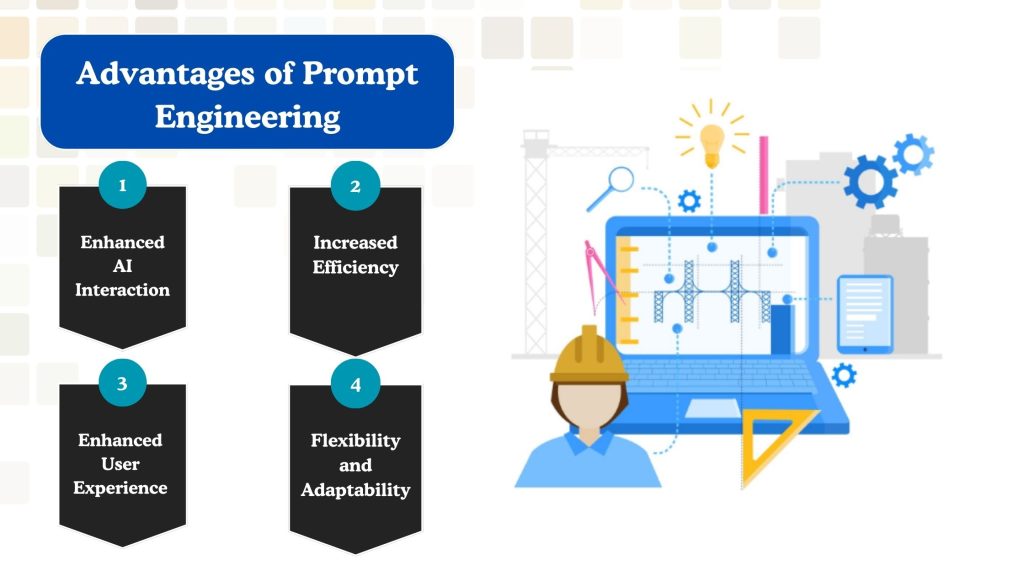

Advantages of Fine-Tuning

Fine-tuning allows organizations to tailor large language models with a high degree of precision, making them better suited for domain-specific or highly specialized use cases. By retraining on relevant datasets, the model learns to deliver consistent, context-aware outputs without needing extensive prompt adjustments. This makes fine-tuning an excellent choice for projects where accuracy, compliance, and performance reliability are critical. Below are the advantages that make fine-tuning a valuable approach for certain scenarios.

Improved Accuracy and Relevance

Fine-tuning helps models produce more accurate and relevant results by aligning them closely with domain-specific language, terminology, and workflows. For instance, a financial services model trained on industry regulations and historical data will naturally deliver more precise answers than a general-purpose model.

Reduced Bias

By incorporating balanced, high-quality training data, fine-tuning can help reduce the presence of biases in model outputs. This is particularly important for industries like recruitment, healthcare, and legal services, where impartiality is a key requirement.

Efficient Resource Utilization

Although fine-tuning requires an upfront investment of time and computing power, it can reduce the need for constant prompt experimentation later. Once a model is tuned for a specific domain, it can perform tasks consistently without frequent manual adjustments, saving long-term operational effort.

Improved Generalization

A fine-tuned model not only excels in the specific domain it’s trained for but can often generalize better to closely related tasks, although its performance on unrelated tasks may degrade. This makes it a strategic choice for businesses planning to expand their AI applications within a particular industry or subject area, especially when supported by custom AI services.

Prompt Engineering vs Fine-Tuning – Key Differences

While both prompt engineering and fine-tuning aim to improve the performance of large language models, they differ significantly in approach, requirements, cost, and long-term flexibility. The table below outlines the core distinctions to help you quickly compare the two.

| Aspect | Prompt Engineering | Fine-Tuning |
|---|---|---|
| Definition | Adjusting the input prompts to guide the model’s responses without changing its underlying parameters. | Retraining the model on a domain-specific dataset to modify its internal parameters for specialized tasks. |
| Data Requirements | Minimal: often no additional training data is required, just well-crafted prompts and examples. | Requires high-quality, labeled domain-specific data for effective customization. |
| Implementation Time | Fast: changes can be implemented and tested immediately. | Slower: requires data preparation, training, and validation. |
| Cost | Low: no retraining costs, only prompt design effort. | Higher: requires computational resources, storage, and potentially licensing fees. |
| Flexibility | Highly flexible: can adapt the same model to multiple use cases quickly. | Less flexible: optimized for specific use cases; may require re-tuning for new domains. |
| Use Case Example | A customer service chatbot adapted to handle seasonal promotions by modifying prompt instructions. | A medical diagnostic assistant trained on clinical data for accurate patient recommendations. |

In-depth Analysis of Key Differences

While both approaches aim to optimize the performance of large language models, their impact and application differ significantly. Here’s how Prompt Engineering and Fine Tuning stack up against each other in key areas.

Control Over Output

Prompt Engineering: Offers control by influencing model behavior through input design. You can adjust the tone, style, or focus instantly by modifying the prompt, but control is indirect and can vary depending on the phrasing of the prompt.

Fine Tuning: Provides direct control by embedding knowledge and rules into the model’s parameters. Once tuned, the model consistently follows domain-specific logic without constant prompt adjustments.

Long-Term Adaptability

Prompt Engineering: Highly adaptable—changes can be implemented in real time without retraining. This makes it ideal for fast-changing environments like seasonal marketing or dynamic customer interactions.

Fine Tuning: Less adaptable in the short term because retraining is needed for major changes. However, it offers strong stability when requirements remain constant over time.

Performance Consistency

Prompt Engineering: Performance can fluctuate if prompts aren’t consistently designed. Slight wording changes may impact results, requiring ongoing refinement to maintain quality.

Fine Tuning: Delivers stable, predictable outputs once trained on relevant data, making it suitable for high-stakes scenarios where accuracy must be consistent.
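Consistency can be measured rather than guessed at. A simple check, sketched below, sends several paraphrases of the same request to the model and reports how many produce the most common answer. The `stub_model` here is a deliberately wording-sensitive toy, not a real model, and the order number is made up.

```python
from collections import Counter
from typing import Callable, List


def agreement_rate(paraphrases: List[str], model: Callable[[str], str]) -> float:
    """Share of paraphrased prompts that yield the most common (normalized) answer."""
    answers = [model(prompt).strip().lower() for prompt in paraphrases]
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers)


def stub_model(prompt: str) -> str:
    """Toy stand-in that reacts to surface wording, as an under-specified prompt might."""
    if "refund" in prompt.lower():
        return "Refund approved"
    return "Please contact support"


paraphrases = [
    "Can I get a refund for order 123?",
    "I want my money back for order 123.",
    "Please refund order 123.",
    "How do I return order 123 for a refund?",
]
rate = agreement_rate(paraphrases, stub_model)
print(f"agreement rate: {rate:.2f}")
```

Running the same check against a prompted model and a fine-tuned one gives a like-for-like view of how stable each is under rewording.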

Maintenance Effort

Prompt Engineering: Low maintenance—updating prompts is quick, inexpensive, and doesn’t require specialized ML expertise.

Fine Tuning: Higher maintenance—models may need retraining when data, compliance rules, or domain knowledge changes, which involves more time and cost.

Scalability Across Use Cases

Prompt Engineering: Scales easily across multiple, diverse applications by simply rewriting prompts for each use case.

Fine Tuning: Best suited for scaling within the same specialized domain; adapting it to entirely different tasks may require multiple fine-tuned models.

When to Choose Prompt Engineering?

Prompt engineering is best suited for situations where flexibility, speed, and low-cost customization are priorities. You should consider this approach when:

- Your requirements change frequently – Perfect for dynamic environments like marketing campaigns, seasonal offers, or fast-evolving customer queries.

- You need quick results – Ideal for projects where timelines are short, and you can’t afford lengthy training cycles.

- You lack domain-specific datasets – Works well when you don’t have the resources or data to train a model from scratch or fine-tune it.

- You want to use one model for many tasks – This allows you to adapt a single model to handle multiple, unrelated use cases simply by modifying the prompts.

- You want to experiment and prototype – This enables rapid testing of different approaches before committing to more complex customizations.

In short, choose prompt engineering when your goal is to quickly adapt and experiment with LLMs without heavy infrastructure investment or technical overhead.

When to Choose Fine-Tuning?

Fine-tuning is the right choice when your project demands high accuracy, domain-specific expertise, and consistent performance over time. You should consider this approach when:

- Your use case is highly specialized – Ideal for industries like healthcare, finance, or legal, where the model must understand technical terminology and context deeply.

- You have access to high-quality, domain-specific data – Fine-tuning thrives when fed with curated datasets that reflect the desired output style and knowledge.

- You need long-term consistency – Perfect for applications where responses must remain stable and predictable regardless of prompt variations.

- Compliance and accuracy are critical – Best for regulated sectors where errors can have significant consequences.

- You want to reduce manual prompt adjustments – Once fine-tuned, the model requires minimal prompting to deliver optimal results.

In short, choose fine-tuning when precision, reliability, and domain expertise outweigh the need for short-term adaptability.

How to Decide the Best Approach for Your Needs

Choosing between prompt engineering and fine-tuning starts with understanding your project’s priorities, resources, and constraints. Begin by assessing the complexity of your use case—if it requires deep domain expertise and consistent accuracy, fine-tuning may be the better fit. However, if you need speed, adaptability, and lower costs, prompt engineering is often the smarter choice.

Next, evaluate your data availability. Fine-tuning relies on high-quality, domain-specific datasets, while prompt engineering can work effectively with minimal or no additional data. Consider your budget and infrastructure as well—fine-tuning requires more computing power and training time, whereas prompt engineering can be executed quickly without specialized infrastructure.

Finally, think about long-term scalability. If your needs are likely to evolve and span multiple domains, prompt engineering offers greater flexibility. If your work will remain within a specific field for the foreseeable future, investing in fine-tuning can pay off with improved accuracy and reduced manual adjustments.

The key is to match the method to your goals, rather than trying to make one approach fit every situation. In some cases, a hybrid strategy—utilizing prompt engineering for rapid adaptation and fine-tuning for core, specialized tasks—can yield the best results.
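The criteria discussed in this section can be condensed into a rough decision helper. This is only one possible encoding of the guidance above; the field names and the ordering of the rules are assumptions, and a real decision would weigh these factors with more nuance.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    highly_specialized_domain: bool
    has_curated_domain_data: bool
    has_training_budget: bool
    requirements_change_often: bool
    needs_results_quickly: bool


def recommend_approach(profile: ProjectProfile) -> str:
    """Map a project profile to a starting recommendation."""
    # Fine-tuning needs both data and compute; without them it is not an option.
    can_fine_tune = profile.has_curated_domain_data and profile.has_training_budget
    if not can_fine_tune or not profile.highly_specialized_domain:
        return "prompt engineering"
    # Specialized work that also needs agility points to combining both methods.
    if profile.requirements_change_often or profile.needs_results_quickly:
        return "hybrid"
    return "fine-tuning"


# Hypothetical project: a stable, specialized domain with data and budget in hand.
clinical_assistant = ProjectProfile(
    highly_specialized_domain=True,
    has_curated_domain_data=True,
    has_training_budget=True,
    requirements_change_often=False,
    needs_results_quickly=False,
)
print(recommend_approach(clinical_assistant))
```

Treat the output as a conversation starter for the team, not a verdict.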

Future Outlook for Both Methods

Both prompt engineering and fine-tuning are expected to remain essential in the evolving landscape of large language models, but their roles will likely become more complementary over time. As LLMs become more capable, prompt engineering will benefit from enhanced natural language understanding, making it easier to achieve high-quality results with less trial and error. This will enhance its appeal for rapid prototyping, multi-domain use cases, and scenarios where agility is most crucial.

Fine tuning, on the other hand, is poised to gain from advancements in parameter-efficient tuning and transfer learning, which will reduce computational costs while retaining precision. Industries with strict compliance standards—such as healthcare, finance, and law—will continue to rely heavily on fine-tuning to meet their accuracy and consistency requirements.

A likely future trend is the rise of hybrid approaches, where organizations use prompt engineering for experimentation and adaptability, and fine-tuning for mission-critical, high-accuracy applications. This combination will enable businesses to balance cost, speed, and performance, making both methods equally valuable in the AI ecosystem.

Use BigDataCentric to Unlock the Full Potential of Prompt Engineering

At BigDataCentric, we help businesses leverage both prompt engineering and fine tuning to achieve tailored, high-performing AI solutions. Our team combines deep expertise in natural language processing, model optimization, and domain-specific knowledge to ensure your AI delivers consistent, accurate, and context-aware results.

Whether you need quick deployment through advanced prompt design or long-term scalability via fine-tuned models, we provide the strategy and execution to match your goals.

We work closely with you to understand your use case, evaluate data requirements, and implement the most effective approach—sometimes combining both methods for maximum impact. By partnering with BigDataCentric, you not only get technical excellence but also a results-driven roadmap that aligns AI capabilities with your business objectives.

Conclusion

Both prompt engineering and fine tuning are powerful methods for shaping AI performance, each with distinct strengths depending on the situation. Prompt engineering offers flexibility, quick implementation, and lower costs, making it ideal for rapid experimentation or evolving requirements. Fine tuning, on the other hand, delivers long-term consistency, higher accuracy, and optimized performance for specialized use cases.

The key is to evaluate your business goals, available resources, and desired outcomes before choosing between the two—or even combining them for a hybrid approach. By understanding the nuances of Prompt Engineering vs Fine Tuning, businesses can unlock smarter, more efficient AI-driven solutions. Partnering with an expert like BigDataCentric ensures you not only make the right choice but also maximize the value of your AI investment.

FAQs

- How much data is required for fine tuning?

Fine tuning typically requires a high-quality, labeled dataset relevant to your domain, whereas prompt engineering often requires little to no additional data.

- Can I use prompt engineering and fine tuning together?

Yes. Many organizations combine prompt engineering for rapid experimentation with fine tuning for critical, specialized tasks to get the best of both worlds.

- Is it possible to fine-tune a pre-trained model without extensive coding knowledge?

Yes, with modern AI tools and platforms, it’s possible to fine-tune pre-trained models using user-friendly interfaces, although having some coding knowledge can be beneficial for deeper customization.

- Can I modify a fine-tuned model later if my requirements change?

Yes, fine-tuned models can be further retrained or adjusted to accommodate new needs, although it may require additional computational resources.

- How long does it take to retrain a model through fine tuning?

The time required for fine tuning depends on factors like the size of the dataset, model complexity, and computational power. It can range from a few hours to several days.
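For a back-of-the-envelope estimate, the number of optimizer steps follows directly from dataset size, epochs, and batch size; multiplying by the time per step gives a rough duration. In the sketch below every input is hypothetical, and the seconds-per-step figure in particular must be measured on your own hardware and model.

```python
import math


def training_steps(n_examples: int, epochs: int, batch_size: int) -> int:
    """Total optimizer steps needed to see every example `epochs` times."""
    return math.ceil(n_examples * epochs / batch_size)


def estimate_training_hours(
    n_examples: int, epochs: int, batch_size: int, seconds_per_step: float
) -> float:
    """Rough wall-clock estimate; ignores evaluation, checkpointing, and queueing."""
    return training_steps(n_examples, epochs, batch_size) * seconds_per_step / 3600


# Hypothetical run: 10,000 examples, 3 epochs, batch size 16, 2 seconds per step.
steps = training_steps(10_000, 3, 16)
hours = estimate_training_hours(10_000, 3, 16, seconds_per_step=2.0)
print(f"{steps} steps, about {hours:.1f} hours")
```

Doubling the dataset or the number of epochs doubles the estimate, which is why dataset size and model complexity dominate the answer.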

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.
