The Complete Guide on How to Build an LLM for Creating Your Own Model

Blog Summary:

This blog breaks down the complete process for building an LLM, from understanding its architecture to training, fine-tuning, and deployment. It explains key stages like data preparation, tokenization, model selection, and retrieval integration. The content also covers optimization, scaling, and performance evaluation for real-world applications. With the right strategy and domain-focused datasets, organizations can build their own LLM tailored to specific needs. BigDataCentric further supports this journey with expertise in model development, training, and enterprise-ready implementation.

Understanding How to build an LLM has become essential as more businesses explore custom models tailored to their data, workflows, and privacy requirements. Modern language models can power chatbots, automate content processes, enhance search systems, and support decision-making across industries.

Creating one involves defining the model’s purpose, preparing the appropriate dataset, selecting an architecture, and training it on scalable compute resources. Whether the goal is to build your own LLM or refine a custom LLM model for domain-specific tasks, following a structured process ensures better performance and reliability.

With well-planned stages—from data collection to deployment—organizations can build their own large language model that fits unique operational needs while maintaining scalability and control. The next sections break down each step involved in this development journey.

How Does an LLM Work?

Large language models learn patterns, relationships, and context from massive text datasets. They convert text into tokens, process them through deep neural networks, and predict the most likely next output based on their probabilities.

Understanding how these core components work is essential before exploring how to build an LLM effectively –

Transformer Architecture

Transformers are the backbone of modern LLMs. They process information in parallel rather than sequentially, making them significantly faster and more scalable than older architectures such as RNNs or LSTMs.

Their ability to capture long-range dependencies in text enables them to understand complex prompts and generate coherent responses. The architecture is built using multiple layers of attention blocks, feed-forward networks, and normalization components that help the model learn contextual meaning at scale.

Self-attention Mechanism

Self-attention allows an LLM to evaluate the importance of each word relative to others in a sentence. Instead of treating all tokens equally, the model assigns attention scores that determine how strongly one token influences another.

This mechanism helps the model capture nuanced relationships, such as tone, context shifts, and multi-step reasoning. It also improves the model’s ability to handle longer sequences and generate more accurate outputs.

Role of Tokenization

Tokenization transforms raw text into smaller units, such as bytes, characters, subwords, or full words. Most LLMs use subword tokenization (e.g., BPE or WordPiece) to efficiently represent vocabulary while reducing ambiguity.

A good tokenization strategy ensures that rare words, multilingual text, and domain-specific terminology are represented accurately. It also directly influences vocabulary size, embedding efficiency, and training stability.

Training vs Inference

Training is the phase in which the model learns from large datasets using objectives such as next-token prediction or masked token learning. It requires significant computing resources, optimized hyperparameters, and distributed training strategies.

Inference, on the other hand, is the process of generating outputs using the trained model. During inference, techniques such as quantization, caching, and optimized batching help reduce latency and improve response time. Balancing both stages is key for anyone aiming to build LLMS from scratch or deploy it at scale.

You Might Also Like:

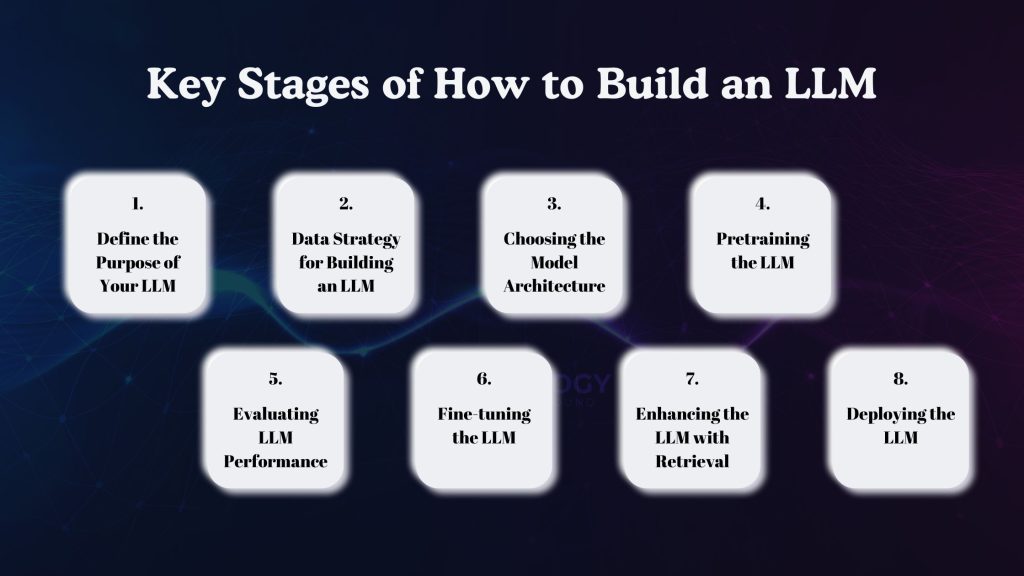

Key Stages of How to Build an LLM

Building a large language model is a multi-stage journey. Below are the essential steps that help you go from concept to a working LLM — whether your goal is to build a base model or a specialized one.

Define the Purpose of Your LLM

Before doing anything else, you must clearly define why you are building the model. That purpose will guide decisions about data, architecture, computing resources, and evaluation criteria.

-

Target Tasks and Use Cases

Decide whether the LLM is meant for general-purpose language generation or for more specific tasks like summarization, question-answering, domain-specific content generation, or chatbot responses.

For some applications — for example, legal document analysis or customer support — you might want a model trained mostly on domain-specific data. For others, such as general content generation or multilingual support, a broader dataset and a more general architecture may be more appropriate.

-

Supported Languages and Domains

If your LLM must work across multiple languages or specialized domains (e.g., medical, technical, legal), define these early. This affects your data sourcing, tokenization/vocabulary design, and ultimately how well your model handles domain-specific jargon, named entities, multilingual syntax, and semantics.

-

Performance Goals and Benchmarks

Set clear expectations — for instance, how accurate its answers should be, how fluent, how fast, how robust to ambiguous queries. Benchmarking criteria might include perplexity on a held-out set, task-specific accuracy (e.g., summarization quality or QA accuracy), or inference latency.

Having measurable goals makes it easier later to evaluate whether your model meets requirements or needs more fine-tuning or data.

-

Model Size Planning

Based on tasks, languages, and performance goals, estimate the model’s size (number of parameters, depth, etc.). A small- to medium-sized model may be enough for specialized tasks or narrow domains.

In contrast, a general, high-capacity LLM may require billions of parameters to achieve broad language coverage and handle diverse tasks. Size planning impacts compute resource needs, training time, and infrastructure requirements.

Data Strategy for Building an LLM

A strong data strategy is the foundation for a high-quality LLM. The quality, diversity, cleanliness, and structure of the data will heavily influence how well the model learns language structure and domain knowledge, and how generalizable or specialized it becomes.

-

Data Collection Sources

Gather data from multiple relevant sources — public-domain text corpora, web-scraped content, proprietary documents, domain-specific datasets, multilingual text sources, etc.

For a general-purpose LLM, broad and diverse data works; for a domain-specific model, you may combine public data with your private or specialized datasets. Diversification ensures the model sees a wide variety of language styles, contexts, and vocabulary.

-

Data Cleaning and Preprocessing

Raw text data often contains noise: HTML tags, formatting artifacts, duplicates, encoding issues, or irrelevant content. These must be cleaned out. Preprocess text by normalizing it with consistent encoding, adjusting whitespace, and removing duplicates or low-quality content.

You should also strip non-textual noise, such as HTML tags or malformed characters, and standardize the overall formatting.Clean and consistent data helps the model learn better language patterns and reduces the risk of garbage output. Amazon Web Services, Inc.+1

-

Tokenization Setup

Design a tokenization and vocabulary strategy suitable for your data’s language(s) and domain. Using subword tokenization (such as BPE, WordPiece or similar) helps represent both common and rare words efficiently, capturing domain-specific vocabulary or multilingual text without exploding vocabulary size.

This balances model flexibility with manageable embedding sizes. Wikipedia+1

-

Dataset Structuring

Organize data into training, validation, and test splits. Ensure that the test set represents realistic or expected use-cases so you can reliably evaluate model performance later.

For domain-specific tasks, include samples covering edge cases, rare vocabulary, and boundary conditions. A carefully structured dataset helps prevent overfitting and ensures generalization.

-

Bias Handling

When using real-world or web-sourced text, data may contain biases, toxic language, or harmful content. Include filtering or annotation steps to remove or flag such content.

Consider balancing representation across languages, styles, demographics, or topics to reduce bias. This helps produce a fairer, safer, and robust LLM.

Your LLM Idea, Our Expertise

From architecture to fine-tuning, we support every step of building a custom LLM tailored to your workflows, domain, and performance needs.

Choosing the Model Architecture

Once purpose and data are defined, choose the architecture that best suits your goals. Architectural decisions have long-term implications on model capability, resource needs, and adaptability.

-

Decoder-only Architecture

A decoder-only transformer (like many autoregressive LLMs) predicts the next token given previous tokens. This architecture excels for tasks such as language generation, completion, and dialogue. It is simpler, faster at inference, and more suitable when the focus is on output generation rather than encoding complex inputs.

-

Encoder-decoder Architecture

An encoder-decoder (aka seq2seq) setup — where the input is encoded into a context representation and then decoded into an output — often serves tasks such as translation, summarization, or structured output.

This architecture can be more versatile when you need to transform or map input text to a different output, rather than just continuing text generation.

-

Layers and Parameters

Decide on the number of transformer layers, hidden size, number of attention heads, embedding dimension, and other architecture hyperparameters. A deeper or wider design allows more capacity to learn complex language patterns or multi-domain knowledge — but also demands more compute and memory.

Choosing parameters is a trade-off: more capacity increases potential, but also resource cost.

-

Vocabulary and Embedding Design

Based on your tokenization strategy and the languages/domains you support, determine vocabulary size and embedding design. Embeddings need to capture semantic and syntactic relationships; careful design helps the model handle domain-specific terms, multilingual inputs, and rare vocabulary without sacrificing general language understanding.

-

Choosing Frameworks and Tools

Select the libraries, frameworks, or toolkits for model development and training — for example, deep-learning frameworks like PyTorch or TensorFlow, tokenizer libraries, distributed training frameworks, dataset pipelines, data loaders, and tooling for evaluation and deployment. These choices influence scalability, ease of experimentation, and resource optimization.

Pretraining the LLM

Pretraining teaches the model the fundamentals of language by exposing it to large-scale text data. At this stage, the LLM learns grammar, semantic patterns, relationships, and context before being adapted to specific use cases. A strong pretraining setup ensures the model understands language well enough to perform downstream tasks effectively.

-

Training Objectives

Training objectives define what the model is trying to learn. Most LLMs use next-token prediction, helping them understand context and generate fluent text. Encoder–decoder setups may use masked or sequence-to-sequence objectives for better comprehension.

Choosing the right objective ensures smoother learning and stable behavior during training.

-

Setting Hyperparameters

Hyperparameters shape how the model learns. Learning rate, batch size, warm-up steps, and optimizer settings directly affect training stability.

A well-tuned combination prevents divergence, speeds convergence, and ensures efficient compute use. Regular monitoring helps catch issues early and refine training performance.

-

Distributed Training Setup

Large LLMs require multiple GPUs or nodes to train efficiently. Distributed training splits workloads using data, tensor, or pipeline parallelism to handle massive parameter sizes.

Tools like DeepSpeed and Megatron-LM make scaling easier while maintaining accuracy. Proper distribution significantly reduces training time.

-

Managing GPU/TPU Resources

Efficient resource management is essential for large-scale training. Techniques like mixed-precision, gradient checkpointing, and activation sharding optimize memory and computation.

GPUs offer flexibility, while TPUs provide high throughput for bigger workloads. Good monitoring ensures peak performance across devices.

Evaluating LLM Performance

Model evaluation ensures the LLM is learning correctly and performing as expected across different tasks. This stage helps identify issues such as poor generalization, bias, weaknesses in reasoning, and gaps in domain understanding. A well-structured evaluation process guides further tuning and ensures reliability before deployment.

-

Perplexity and Loss

Perplexity measures how well the model predicts text, with lower values indicating better predictive performance. Loss provides a direct signal of how training is progressing.

Monitoring both helps confirm whether the model is learning efficiently or overfitting. Stable downward trends generally reflect healthy training behavior.

-

Task Evaluation

Beyond generic metrics, the model must be evaluated on real tasks such as summarization, Q&A, text classification, and generation quality. Using domain-specific benchmarks ensures the LLM aligns with intended use cases.

Human evaluation may also be included for coherence, relevance, and accuracy. Together, these measures reflect actual performance.

-

Safety, Bias, and Robustness Testing

LLMs must be tested for harmful outputs, demographic bias, misinformation, and vulnerability to prompt manipulation. This involves curated test sets, adversarial prompts, and red-teaming techniques.

Ensuring consistent and safe behavior protects users and maintains trust. Robustness testing also helps identify weaknesses before deployment.

Fine-tuning the LLM

Fine-tuning adapts a pretrained model to specific tasks, industries, or datasets. It requires less data and computational resources than pretraining, making it practical for organizations seeking targeted performance improvements.

While prompt engineering guides the model with smarter instructions, fine-tuning actually updates its internal behavior to achieve deeper accuracy and consistency. This step helps the LLM better understand domain-specific language, terminology, and context.

-

Fine-tuning vs PEFT

Traditional fine-tuning updates most model parameters, which can be costly and resource-heavy. PEFT (Parameter-Efficient Fine-Tuning) focuses only on small, trainable components while keeping the main model frozen. This reduces compute usage and training time while achieving comparable performance. PEFT is ideal for rapid and scalable customization.

-

LoRA and QLoRA Methods

LoRA injects low-rank adaptor layers into the model, enabling efficient fine-tuning without modifying core weights. QLoRA goes further by using 4-bit quantization to reduce memory needs during training.

Both methods enable fine-tuning large models on smaller GPUs while maintaining high-quality results.

-

Domain Datasets

For specialized tasks, curated domain datasets are essential. These may include legal documents, medical texts, financial reports, customer conversations, or technical manuals.

Clean, task-aligned data ensures the LLM learns the right vocabulary, tone, and reasoning patterns. High-quality domain input directly improves output accuracy.

Build Your Own LLM with Confidence

Work with experts who understand every layer of LLM design—from data strategy to deployment—so your model is powerful, accurate, and reliable.

Enhancing the LLM With Retrieval

Retrieval enhances an LLM by giving it access to updated or external knowledge during inference.

Instead of generating answers solely from its internal parameters, the model retrieves relevant context from a knowledge source, resulting in more accurate, factual, and current outputs.

-

RAG Architecture Overview

RAG (Retrieval-Augmented Generation) combines an LLM with a retrieval layer. When a question is asked, the system fetches relevant documents, and the LLM generates responses using both the prompt and retrieved context.

This hybrid approach reduces hallucinations and expands the model’s effective knowledge base.

-

Vector Database

A vector database stores document embeddings, making it easy to find semantically similar content. Tools like Pinecone, FAISS, and Milvus enable fast, scalable similarity search.

The LLM retrieves the closest matches to the user query, ensuring more relevant context is supplied during generation.

-

Context Injection

After retrieval, relevant text is injected into the prompt for the LLM to use during inference. This ensures the model produces grounded, up-to-date answers.

Context injection is crucial in knowledge-intensive domains such as finance, law, and research, where accuracy matters.

Deploying the LLM

Deployment ensures the model is accessible via an API, an application, or an internal system. The process involves selecting infrastructure, optimizing performance, and setting up monitoring pipelines. A strong deployment setup ensures stability, scalability, and cost efficiency.

-

Cloud vs On-prem Deployment

Cloud deployment offers scalability, managed GPU infrastructure, and quick setup. On-prem deployment provides more data control, privacy, and compliance—ideal for sensitive industries. The choice depends on data security needs, budget, and operational flexibility.

-

Inference Optimization Techniques

Inference can be optimized through quantization, model distillation, batching, caching, and efficient runtime frameworks. These techniques reduce latency and cost, especially for high-traffic applications. Optimization ensures the model responds fast without compromising quality.

-

Scaling & Monitoring

As usage grows, the model must handle more requests reliably. Autoscaling, load balancing, and health monitoring keep the system performing steadily. Tracking metrics such as latency, memory usage, accuracy, and token throughput helps maintain operational health and user satisfaction.

-

Version Control and Continuous Updates

Versioning ensures smooth updates and rollback capabilities. It tracks model iterations, fine-tuned variants, and configuration changes. Continuous updates allow improvements in accuracy, safety, and domain relevance while maintaining consistency across deployments.

How Does BigDataCentric Help You Build an LLM?

BigDataCentric guides businesses through every stage of developing a custom language model — from defining goals to deploying a production-ready system. The team helps identify the right model architecture, prepares high-quality domain datasets, and sets up efficient training pipelines.

With expertise in machine learning development, data science, and workflow automation, BigDataCentric ensures the model aligns with your operational and performance requirements.

Beyond development, BigDataCentric supports optimization, fine-tuning, monitoring, and long-term scaling. Their experience in chatbot development, predictive systems, and enterprise intelligence helps create models that deliver accurate outputs and remain reliable in real-world use.

By combining technical depth with strong implementation support, BigDataCentric makes the entire LLM-building process smooth, secure, and business-ready.

Need Help Building an LLM?

Whether it’s planning, dataset preparation, or optimizing performance, our experts guide you at every step to create a model that truly delivers.

Conclusion

Building a large language model involves careful planning, appropriate architectural choices, high-quality datasets, and an optimized training and deployment workflow. Every step—from defining the model’s purpose to fine-tuning, retrieval integration, and monitoring—shapes how well the LLM performs in real applications.

With the right approach, organizations can build models that understand context, adapt to domain needs, and deliver reliable results.

As more industries lean toward automation and intelligent systems, knowing how to build an LLM becomes a strategic capability. Whether you aim to build your own large language model or optimize an existing one, combining strong technical foundations with domain-focused data ensures long-term success.

With the right support and infrastructure, you can create scalable, secure, and high-performing LLM solutions tailored to your business goals.

FAQs

-

Can I build my own LLM model?

Yes, you can build your own LLM by preparing a large dataset, choosing the right architecture, and training it with sufficient compute resources. Smaller, domain-specific LLMs are even more achievable with fine-tuning and PEFT methods.

-

Is ChatGPT NLP or LLM?

ChatGPT is an LLM built using modern NLP techniques. It performs NLP tasks, but its underlying architecture is a large transformer-based language model.

-

Can you run an LLM locally?

Yes, lightweight and quantized models can run locally on consumer GPUs or even CPUs. Larger models may require high-end hardware, but tools like QLoRA and 4-bit quantization make local deployment easier.

-

What is the difference between LLM and MLS?

An LLM is a large language model designed for text understanding and generation. MLS typically refers to machine learning systems, a broader category that includes models beyond language tasks, such as vision, prediction, and classification.

-

How do I ensure data quality when building an LLM?

Ensure data quality by removing noise, duplicates, and irrelevant content while standardizing formatting. Use clean, diverse, and well-structured datasets to improve model accuracy and reduce bias.

About Author

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.

Table of Contents

ToggleHere's what you will get after submitting your project details:

- A strict non-disclosure policy.

- Get in discuss with our experts.

- Get a free consultation.

- Turn your idea into an exceptional app.

- Suggestions on revenue models & planning.

- No obligation proposal.

- Action plan to start your project.

- We respond to you within 8 hours.

- Detailed articulate email updates within 24 hours.

Our Offices

USA

500 N Michigan Avenue, #600,Chicago IL 60611